OS03: Threads

Based on Chapter 2 of [Hai19]

(Usage hints for this presentation)

This presentation is archived and will not receive further updates. Updated presentations on Operating Systems are available in the course IT Systems.

Computer Structures and Operating Systems 2023

Dr. Jens Lechtenbörger (License Information)

1. Introduction

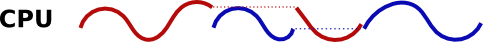

1.1. OS Plan

- OS Overview (Wk 20)

- OS Introduction (Wk 21)

- Interrupts and I/O (Wk 21)

- Threads (Wk 23)

- Thread Scheduling (Wk 24)

- Mutual Exclusion (MX) (Wk 25)

- MX in Java (Wk 25)

- MX Challenges (Wk 25)

- Virtual Memory I (Wk 26)

- Virtual Memory II (Wk 26)

- Processes (Wk 27)

- Security (Wk 28)

1.2. Today’s Core Questions

- What exactly are threads?

- Why and for what are they used?

- How can I inspect them?

- How are they created in Java?

- What impact does blocking or non-blocking I/O have on the use of threads?

- How does switching between threads work?

1.3. Learning Objectives

- Explain thread concept, thread switching, and multitasking

- Including states (after upcoming presentation)

- Explain distinctions between threads and processes

- Explain advantages of a multithreaded organization in structuring applications and in performance

- Inspect threads on your system

- Create threads in Java

- Discuss differences between and use cases for blocking and non-blocking I/O

1.4. Retrieval practice

1.4.1. Informatik 1

What are interfaces and classes in Java, what is “this”?

If you are not certain, consult a textbook; these self-check questions and preceding tutorials may help:

- https://docs.oracle.com/javase/tutorial/java/concepts/QandE/questions.html

- https://docs.oracle.com/javase/tutorial/java/IandI/QandE/interfaces-questions.html

1.4.2. Recall: Stack

- Stack = Classical data structure (abstract data type)

- LIFO (last in, first out) principle

- See Appendix A in [Hai19] if necessary

- Two elementary operations

Stack.Push(o): place objectoon top ofStackStack.Pop(): remove object from top ofStackand return it

- Supported in machine language of most processors (not in Hack,

though)

- Typically (e.g., x86), stack grows towards smaller addresses

- Next object pushed gets smaller address than previous one

- (Differently from stack of VM for Hack platform)

- Typically (e.g., x86), stack grows towards smaller addresses

1.4.3. Drawing on Stack

What's the stack?

Figure © 2016 Julia Evans, all rights reserved; from julia's drawings. Displayed here with personal permission.

1.4.4. Previously on OS …

What is a thread?

Threads!

Figure © 2016 Julia Evans, all rights reserved; from julia's drawings. Displayed here with personal permission.

- What are multitasking and scheduling?

1.4.5. Recall: Blocking vs Non-Blocking I/O

- For

blocking as well as non-blocking I/O,

thread invokes system call

- OS is responsible for I/O

- Blocking I/O: OS initiates I/O and schedules different thread for

execution

- Calling thread is blocked for duration of I/O

- After I/O is finished, OS un-blocks calling thread

- Un-blocked thread to be scheduled later on, with result of I/O system call

- Non-blocking I/O: OS initiates I/O and returns (incomplete) result to calling thread

Table of Contents

2. Threads

2.1. Threads and Programs

- Program vs thread

- Program contains instructions to be executed on CPU

- OS schedules

execution of programs

- By default, program execution starts with one thread

- Thread = unit of OS scheduling = independent sequence of computational steps

- Programmer determines how many threads are created

- (OS provides system calls for thread management, Java an API)

- By default, program execution starts with one thread

- Simple programs are single-threaded

- More complex programs can be multithreaded

- Multiple independent sequences of computational steps

- E.g., an online game: different threads for game AI, GUI events, network handling

- Multi-core CPUs can execute multiple threads in parallel

- Multiple independent sequences of computational steps

2.2. Thread Creation and Termination

- Different OSes and different languages provide different APIs to

manage threads

- Thread creation

- Following example: Java

- [Hai19]: Java and POSIX threads

- Thread termination

- API-specific functions to end/destroy threads

- Implicit termination when “last” instruction ends

- E.g., in Java when methods

main()(for main thread) orrun()(for other threads) end (if at all)

- E.g., in Java when methods

- Thread creation

2.3. Thread Terminology

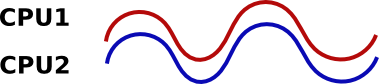

2.3.1. Parallelism

- Parallelism = simultaneous execution

- E.g., multi-core

- Potential speedup for computations!

- (Limited by Amdahl’s law)

- Note

- Processors contain more and more cores

- Individual cores do not become much faster any longer

- Recall CS part about Moore’s law

- Consequence: Need parallel programming to take advantage of current hardware

2.3.2. Concurrency

Concurrency is more general term than parallelism

- Concurrency includes

Parallel threads (on multiple CPU cores)

- (Executing different code in general)

Interleaved threads (taking turns on single CPU core)

- With gaps on single core!

- Challenges and solutions for concurrency apply to parallel

and interleaved executions

- Topics covered in upcoming presentations (mutual exclusion (MX), MX in Java, MX challenges)

- Concurrency includes

2.3.3. Thread Preemption

- Preemption = temporary removal of thread from CPU by OS

- Before thread is finished (with later continuation)

- To allow others to continue after scheduling decision by OS

- Typical technique in modern OSs

- Run lots of threads for brief intervals per second; creates illusion of parallel executions, even on single-core CPU

- Before thread is finished (with later continuation)

- Later slides: Cooperative vs preemptive multitasking

- Upcoming presentation: Thread scheduling

2.3.4. Thread Classification

- I/O bound

- Threads spending most time submitting and waiting for I/O requests

- Run frequently by OS, but only for short periods of time

- Until next I/O operation

- E.g., virus scanner, network server (e.g., web, chat)

- CPU bound

- Threads spending most time executing code

- Run for longer periods of time

- Until preempted by scheduler

- E.g., graph rendering, compilation of source code, training for deep learning

3. Java Threads

3.1. Threads in Java

- Threads are created from instances of classes implementing the

Runnable interface

- Implement

run()method - Create new Thread instance from

Runnableinstance - Invoke

start()method onThreadinstance

- Implement

- Alternatives (beyond the scope of this course)

- Subclass of

Thread(Thread implements Runnable)- If more than

run()overwritten

- If more than

- java.util.concurrent.Executor

- With

Callable<V>,Future<V>and service methods ofExecutor - Virtual threads

- Later slide with some ideas

- With

- Subclass of

3.2. Java Thread Example

public class Simpler2Threads { // Based on Fig. 2.3 of [Hai17] // "Simplified" by removing anonymous class. public static void main(String args[]){ Thread childThread = new Thread(new MyThread()); childThread.start(); sleep(5000); System.out.println("Parent is done sleeping 5 seconds.");} static void sleep(int milliseconds){ // Sleep milliseconds (blocked/removed from CPU). try{ Thread.sleep(milliseconds); } catch(InterruptedException e){ // ignore this exception; it won't happen anyhow }}} class MyThread implements Runnable { public void run(){ Simpler2Threads.sleep(3000); System.out.println("Child is done sleeping 3 seconds."); }}

3.3. Self-Study Task: CPU Usage

This task is available for self-study in Learnweb.

- Compile and run the

source code

of Simpler2Threads and make sure that you

can explain its output. Maybe ask in Learnweb.

- In general, if a program behaves unexpectedly, a debugger

helps to understand what is happening when. Your favorite IDE

probably includes a debugger. Also, several Java implementations

come with a simple debugger called

jdb, e.g., the OpenJDK tools. (The notes on this slide contain sample commands forjdb.)

- In general, if a program behaves unexpectedly, a debugger

helps to understand what is happening when. Your favorite IDE

probably includes a debugger. Also, several Java implementations

come with a simple debugger called

4. Reasons for Threads

4.1. Main Reasons

- Resource utilization

- Keep most of the hardware resources busy most of the time, e.g.:

- While one thread is idle (e.g., waiting for external events such as user interaction, disk, or network I/O), allow other threads to continue

- Keep multiple CPU cores busy

- E.g., OS housekeeping such as zeroing of memory on second core

- Keep most of the hardware resources busy most of the time, e.g.:

- Responsiveness

- Use separate threads to react quickly to external events

- Think of game AI vs GUI

- Other example on later slide: Web server

- Use separate threads to react quickly to external events

- More modular design

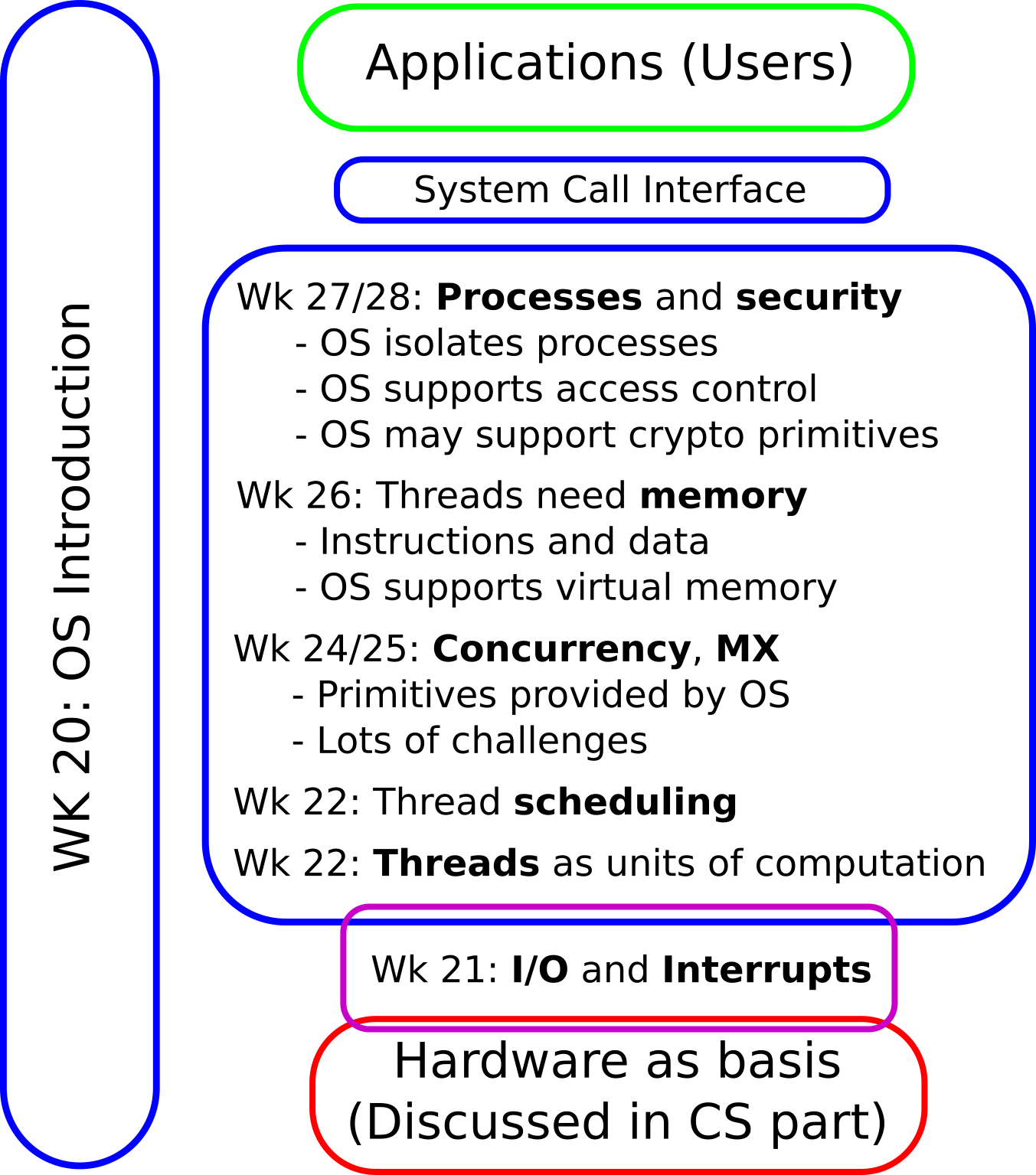

4.2. Interleaved Execution Example

“Interleaved execution example” by Jens Lechtenbörger under CC BY-SA 4.0; SVG image refers to converted and cut parts of Figure 2.6 of a book by Max Hailperin under CC BY-SA 3.0. From GitLab

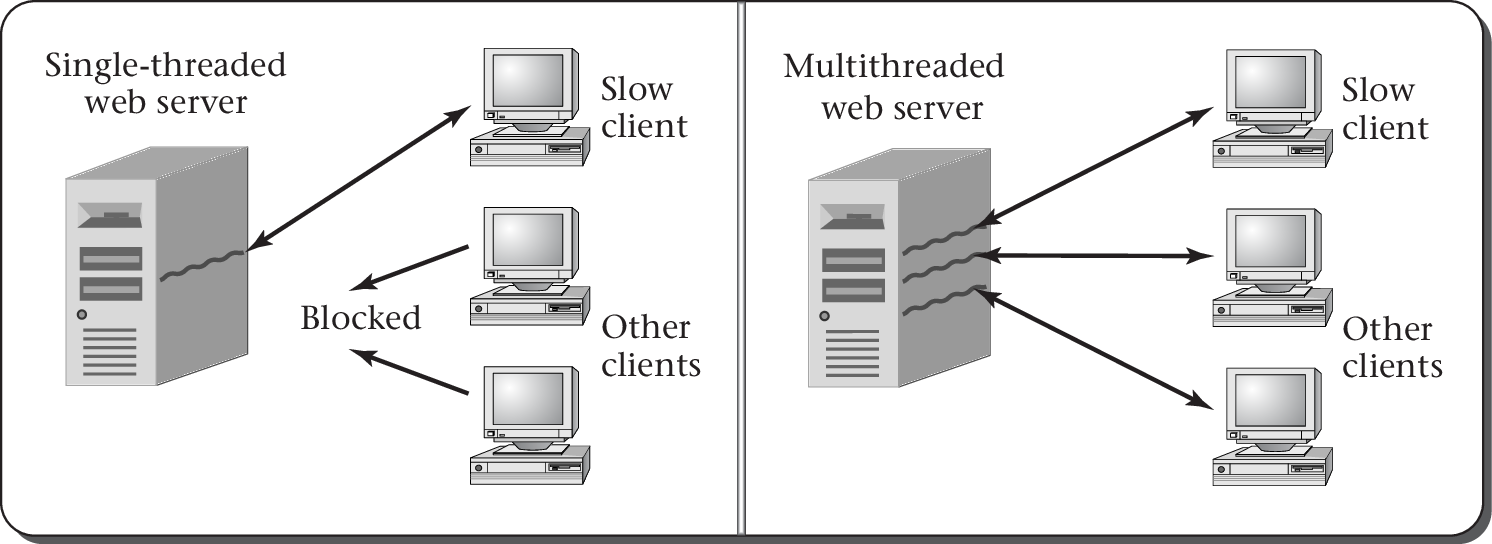

4.3. Example: Web Server

- Web server “talks” HTTP with browsers

- Simplified

- Lots of browsers ask per GET method for various web pages

- Server responds with HTML files

- How many threads on server side?

4.4. Single- vs Multithreaded Web Server

“Figure 2.5 of [Hai19]” by Max Hailperin under CC BY-SA 3.0; converted from GitHub

5. Thread Switching

5.1. Thread Switching for Multitasking

- With multiple threads, OS decides which to execute when

→ Scheduling (later lecture)

- (Similar to machine scheduling for industrial production, which you may know from operations management)

- Recall multitasking

- OS may use time-slicing to schedule threads for short intervals, illusion of parallelism on single CPU core

- After that decision, a context switch takes place

- (Recall introduction and interrupts; now, as thread switch)

- Remove thread A from CPU

- Remember A’s state (in TCB: instruction pointer, register contents, stack, …)

- Dispatch thread B to CPU

- Restore B’s state

5.1.1. Thread Switching with yield

In the following

- First, simplified setting of voluntary switch from thread A to

thread B

- Function

switchFromTo()on next slide- For details, see Sec. 2.4 in [Hai19]

- Leaving the CPU voluntarily is called yielding;

yield()may really be an OS system call

- Function

- Afterwards, the real thing: Preemption by the OS

5.2. Interleaved Instruction Sequence

![Interleaved execution of threads. Based on [[https://github.com/Max-Hailperin/Operating-Systems-and-Middleware--Supporting-Controlled-Interaction/][Figure 2.7 of book by Max Hailperin]], CC BY-SA 3.0.](./figures/OS/3-interleaved-executions.png)

Interleaved execution of threads. Based on Figure 2.7 of book by Max Hailperin, CC BY-SA 3.0.

Figure by Jens Lechtenbörger under CC BY-SA 4.0; from GitLab

5.3. Thread Control Blocks (TCBs)

- All threads share the same CPU registers

- Obviously, register values need to be saved somewhere to avoid incorrect results when switching threads

- Also, each thread has its own

- stack; current position given by stack pointer (SP)

- instruction pointer (IP) (program counter); where to execute next machine instruction

- Besides: priority, scheduling information, blocking events (if any)

- OS uses block of memory for housekeeping,

called thread control block (TCB)

- One for each thread

- Storing register contents, stack pointer, instruction pointer, …

- Arguments of

switchFromTo()are really (pointers to) TCBs

- One for each thread

5.4. Cooperative Multitasking

- Approach based on

switchFromTo()is cooperative- Thread A decides to yield CPU (voluntarily)

- A hands over to B

- Thread A decides to yield CPU (voluntarily)

- Disadvantages

- Inflexible: A and B are hard-coded

- No parallelism, just interleaved execution

- What if A contains a bug and enters an infinite loop?

- Advantages

- Programmed, so full control over when and where of switches

- Programmed, so usable even in restricted environments/OSs without support for multitasking/preemption

5.5. Preemptive Multitasking

- Preemption: OS removes thread forcefully (but only

temporarily) from CPU

- Housekeeping on stacks to allow seamless continuation later on similar to cooperative approach

- OS schedules different thread for execution afterwards

- Additional mechanism: Timer interrupts

- OS defines time slice (quantum), e.g., 30ms

- Interrupt fires every 30ms

- Interrupt handler invokes OS scheduler to determine next thread

- Details in upcoming presentation

- OS defines time slice (quantum), e.g., 30ms

5.6. Multitasking Overhead

- OS performs scheduling, which takes time

- Thread switching creates overhead

- Minor sources: Scheduling costs, saving and restoring state

- Major sources: Recall cache pollution

- After a context switch, the CPU’s cache quite likely misses

necessary data

- Necessary data needs to be fetched from RAM

- Accessing data in RAM takes hundreds of clock cycles

- After a context switch, the CPU’s cache quite likely misses

necessary data

6. Server Models

6.1. Server Models with Blocking I/O: Single-Threaded

Single thread (left-hand side of Figure 2.5; thought experiment, not for use)

![Figure 2.5 of cite:Hai19]()

“Figure 2.5 of [Hai19]” by Max Hailperin under CC BY-SA 3.0; converted from GitHub

- Sequential processing of client requests

- Long idle time during I/O

→ Not suitable in practice

- Long idle time during I/O

6.2. Server Models with Blocking I/O: Multi-Threaded

- One thread (or process) per client connection

- Parallel processing of client connections

- No idle time for I/O (switch to different client)

- Limited scalability (thousands or millions of clients?)

- Creation of threads causes overhead

- Each thread allocates resources

- OS needs to identify “correct” thread for incoming data

- Creation of threads causes overhead

- Worker Thread Pool as compromise

6.3. Server Models with Non-Blocking I/O

- Single thread

- Finite automaton to keep track of state per client

- State of automaton records state of interaction

- Complex code

- See Google’s experience mentioned in [B17]

- Avoids overhead of context switches

- Scalable (may be combined with Worker Thread Pool)

6.4. Worker Thread Pool

- Upon program start: Create set of worker threads

- Client requests received by dispatcher thread

- Requests recorded in to-do data structure

- Idle worker threads process requests

- Note

- Re-use of worker threads

- Limited resource usage

- How to tune for load?

- Dispatcher may be bottleneck

- If more client requests than worker threads, then potentially long delays

6.4.1. Aside: Virtual Threads in Java

- Beyond class topics

- Java 19 introduced virtual threads in preview API

- See JEP 436 or blog post for details

- Overhead reduced to enable thread per client model with

blocking I/O

- Map large number of virtual threads to pool of small number of OS threads

- When virtual thread blocks on I/O, Java runtime performs

non-blocking OS call and

- suspends virtual thread until it can be resumed later and

- executes different virtual thread on same OS thread

7. Conclusions

7.1. Summary

- Threads represent individual instruction execution sequences

- Multithreading improves

- Resource utilization

- Responsiveness

- Modular design in presence of concurrency

- Preemptive multithreading with housekeeping by OS

- Thread switching with overhead

- Design choices: I/O blocking or not, servers with multiple threads or not

Bibliography

- [B17] Barroso, Marty, Patterson & Ranganathan, Attack of the Killer Microseconds, CACM 60(4), 48-54 (2017). https://dl.acm.org/citation.cfm?id=3015146

- [Hai19] Hailperin, Operating Systems and Middleware – Supporting Controlled Interaction, revised edition 1.3.1, 2019. https://gustavus.edu/mcs/max/os-book/

License Information

This document is part of an Open Educational Resource (OER) course on Operating Systems. Source code and source files are available on GitLab under free licenses.

Except where otherwise noted, the work “OS03: Threads”, © 2017-2023 Jens Lechtenbörger, is published under the Creative Commons license CC BY-SA 4.0.

In particular, trademark rights are not licensed under this license. Thus, rights concerning third party logos (e.g., on the title slide) and other (trade-) marks (e.g., “Creative Commons” itself) remain with their respective holders.