OS07: MX Challenges

Based on Chapter 4 of [Hai19]

(Usage hints for this presentation)

This presentation is archived and will not receive further updates. Updated presentations on Operating Systems are available in the course IT Systems.

Computer Structures and Operating Systems 2023

Dr. Jens Lechtenbörger (License Information)

1. Introduction

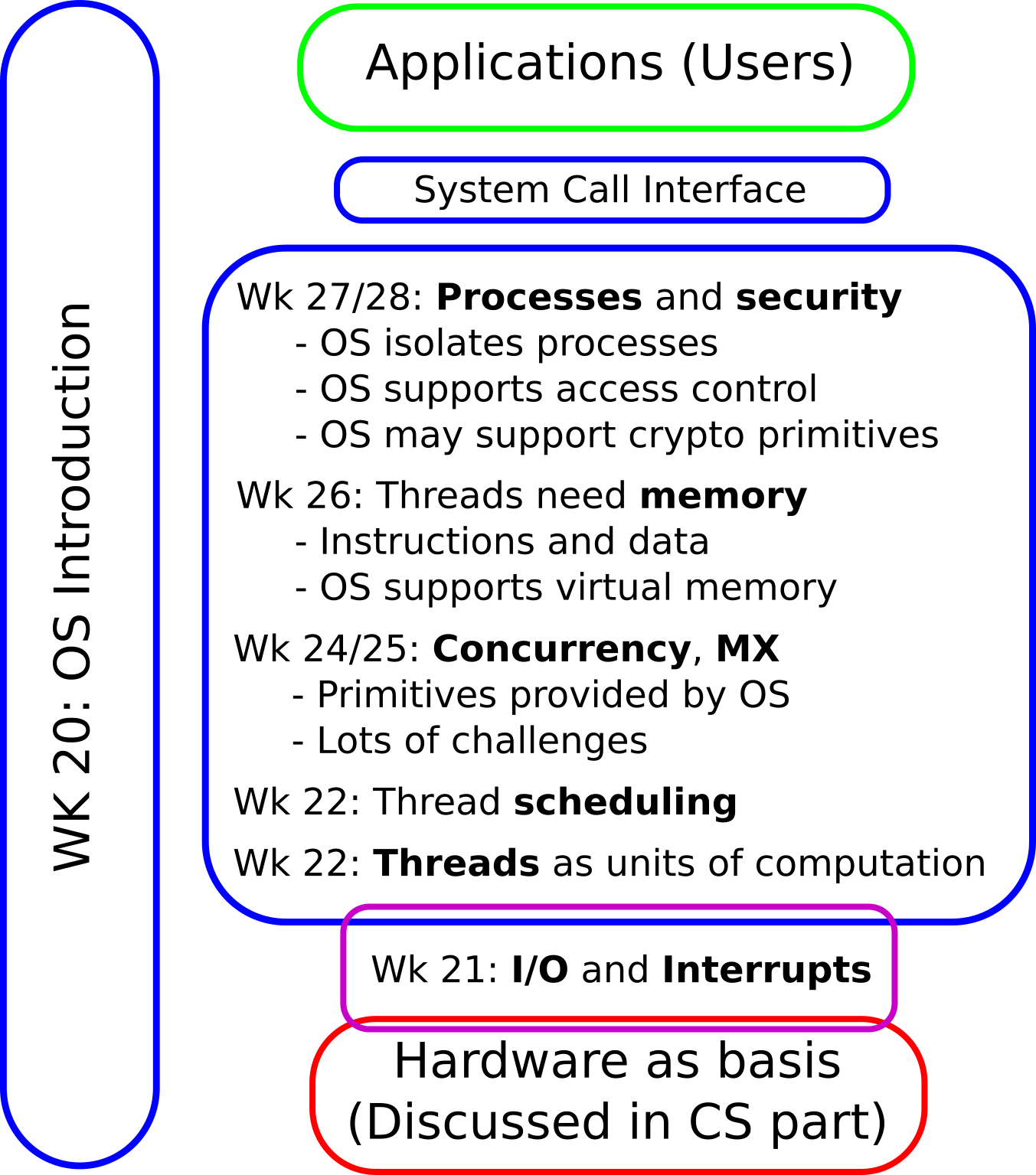

1.1. OS Plan

- OS Overview (Wk 20)

- OS Introduction (Wk 21)

- Interrupts and I/O (Wk 21)

- Threads (Wk 23)

- Thread Scheduling (Wk 24)

- Mutual Exclusion (MX) (Wk 25)

- MX in Java (Wk 25)

- MX Challenges (Wk 25)

- Virtual Memory I (Wk 26)

- Virtual Memory II (Wk 26)

- Processes (Wk 27)

- Security (Wk 28)

1.2. Today’s Core Question

- Am I fine if I lock all shared resources before use?

(Short answer: No. Issues such as priority inversion, deadlocks, starvation may arise.)

1.3. Learning Objectives

- Explain priority inversion and counter measures

- Explain and apply deadlock prevention and detection

- Explain convoys and starvation as MX challenges

1.4. Previously on OS …

- Mutexes may be based either on busy waiting (spinlocks) or on blocking (e.g., lock, mutex, semaphore, monitor)

- Threads may have different priorities

- Lower priority threads are preempted for those with higher priority (e.g., with round robin scheduling), which may lead to starvation

1.4.1. Threads, again

1.5. Different Learning Styles

- In previous years, some students reported that Section 4.8.1 (pp. 135 – 137) of [Hai19] on Priority Inversion is quite easy to understand, while they perceived that section in this presentation to be confusing.

- Note that [Hai19] discusses Priority Inversion resulting from locks/mutexes with blocking, while the slides also contain a variant with spinlocks.

Table of Contents

2. Priority Inversion

2.1. Priority Inversion with Spinlocks

- Example; single CPU core (visualization on next slide)

- Thread T0 with low priority enters CS

- T0 preempted by OS for T1 with high priority

- E.g., an important event occurs, to be handled by T1

- Note that T0 is still inside CS, holds lock

- T1 tries to enter same CS and spins on lock held by T0

- This is a variant of priority inversion

- High-priority thread T1 cannot continue due to actions by

low-priority thread

- If just one CPU core exists: Low-priority thread T0

cannot continue

- As CPU occupied by T1

- Deadlock (discussed subsequently)

- If multiple cores exist: Low-priority thread T0 runs although thread with higher priority does not make progress

- If just one CPU core exists: Low-priority thread T0

cannot continue

- High-priority thread T1 cannot continue due to actions by

low-priority thread

2.1.1. Single Core

See previous slide or notes for explanations

2.1.2. Two Cores

See earlier slide or notes for explanations

2.2. Priority Inversion with Blocking

- (Visualization on next slide)

- T0 with low,

TM with medium,

T1 with high priority

- T0 in CS

- An important event occurs, OS preempts T0 for T1

- T1 attempts entry into same CS, T1 gets blocked

- T0 could continue if no higher priority thread existed

- Another, less important, event occurs,

to be handled by TM

- Based on priority, OS favors TM over T0

- TM runs instead of more highly prioritized T1 → Priority

inversion

- (TM does unrelated work, without need to enter the CS)

- T0 cannot leave CS as long as TM exists

- With long running or many threads of medium priority, T1 (and important event) need to wait for a long time

2.2.1. Blocking CS

See previous slide for explanations

2.3. Priority Inversion Example

- Mars Pathfinder, 1997;

Wikipedia offers details

Robotic spacecraft named Pathfinder

![Sojourner Rover]()

“Sojourner Rover” by NASA under Public domain; from Wikimedia Commons

- With rover named Sojourner (shown to right)

- A “low-cost” mission at $280 million

- Bug (priority inversion) caused repeated resets

- “found in preflight testing but was deemed a glitch and therefore given a low priority as it only occurred in certain unanticipated heavy-load conditions”

- Priority inversion had been known for a long time

- E.g.: [LR80]

2.4. Priority Inversion Solutions

- Priority Inheritance (PI)

- Thread of low priority inherits priority of waiting thread

- E.g., PI-futex in Linux

- E.g., remote update for Mars Pathfinder

- Mutex of Pathfinder OS had flag to activate PI

- Initially, PI was off …

- Thread of low priority inherits priority of waiting thread

- Priority Ceiling (PC)

- Every resource has priority (new concept; so far only threads

had priorities)

- (Highest priority that “normal” threads can have) + 1

- Accessing thread runs with that priority

- Every resource has priority (new concept; so far only threads

had priorities)

- In both cases: Restore old priority after access

2.5. Self-Study and Exercise Tasks

This task is a variant of Exercise 4.10 of [Hai19]. Solve part (1) as self-study in Learnweb. Another variant as exercise task.

- Suppose a computer with only one processor runs a program that

creates three threads, which are assigned high,

medium, and low fixed priorities. (Assume that no other threads

are competing for the same processor.) The threads of high and

medium priority are currently blocked, waiting for different

events, while the thread with low priority is runnable. Some

threads share a single mutex (to protect shared resources that

are not shown).

Pseudocode for each of the threads is shown subsequently.

- Suppose that the mutex does not provide priority inheritance. How soon would you expect the program to terminate? Why?

- Suppose that the mutex provides priority inheritance. How soon would you expect the program to terminate? Why?

High-priority thread: “initially blocked; unblocked to handle event after 200 milliseconds” perform lock() on mutex run for 2 milliseconds on CPU perform unlock() on mutex terminate execution of the whole program Medium-priority thread: “initially blocked; unblocked to handle event after 200 milliseconds” run for 500 milliseconds on CPU Low-priority thread: “initially runnable” perform lock() on mutex perform I/O operation which leads to blocking for 600 milliseconds run for 3 milliseconds on CPU perform unlock() on mutex

- Suppose a computer with only one processor runs a program that

creates three threads, which are assigned high,

medium, and low fixed priorities. (Assume that no other threads

are competing for the same processor.) The threads of high and

medium priority are currently blocked, waiting for different

events, while the thread with low priority is runnable. Some

threads share a single mutex (to protect shared resources that

are not shown).

Pseudocode for each of the threads is shown subsequently.

3. Deadlocks

3.1. Deadlock

Permanent blocking of thread set

“Gridlock” by Interiot~commonswiki and Jeanacoa under CC BY-SA 2.5 Generic; from Wikimedia Commons

- Reason

- Cyclic waiting for resources/locks/messages of other threads

- (Formal definition on later slide)

- Reason

- No generally accepted solution

- Deadlocks can be perceived as programming bugs

- Dealing with deadlocks causes overhead

- Acceptable to deal with (hopefully rare) bugs?

- Dealing with deadlocks causes overhead

- Solutions depend on

- Properties of resources (e.g., linearly ordered ones)

- Properties of threads (transactions?)

- Deadlocks can be perceived as programming bugs

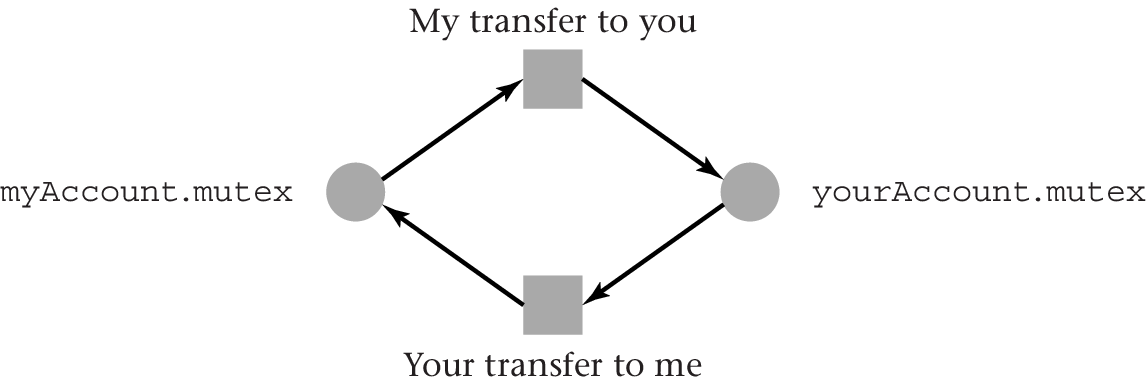

3.2. Deadlock Example

- Money transfers between bank accounts

- Transfer from

myAccounttoyourAccountby thread 1; transfer in other direction by thread 2

- Transfer from

- Race conditions on account balances

- Need mutex per account

- Lock both accounts involved in transfer. What order?

- “Natural” lock order: First, lock source account; then, lock

destination account

- Thread 1 locks

myAccount, while thread 2 locksyourAccount- Each thread gets blocked once it attempts to acquire the

second lock

- Neither can continue

- Deadlock

- Each thread gets blocked once it attempts to acquire the

second lock

- Thread 1 locks

3.3. Defining Conditions for Deadlocks

Deadlock if and only if (1) – (4) hold [CES71]:

- Mutual exclusion

- Exclusive resource usage

- Hold and wait

- Threads hold some resources while waiting for others

- No preemption

- OS does not forcibly remove allocated resources

- Circular wait

- Circular chain of threads such that each thread holds resources that are requested by next thread in chain

3.4. Resource Allocation Graphs

- Representation and visualization of resource allocation as

directed graph

- (Necessary prior knowledge: directed graphs and cycles in such graphs)

- Nodes

- Threads (squares on next slide)

- Resources (circles on next slide)

- Edges

- From thread T to resource R if T is waiting for R

- From resource R to thread T if R is allocated to T

- Example on next slide

- Fact: System in deadlock if and only if graph contains cycle

3.5. Resource Allocation Graph Example

Visualization of deadlock: cyclic resource allocation graph for previous example

(Note: Choice of shapes is arbitrary; just for visualization purposes)

4. Deadlock Strategies

4.1. Deadlock Strategies

- (Ostrich algorithm)

- Deadlock Prevention

- Deadlock Avoidance

- Deadlock Detection

These strategies are covered in subsequent slides.

4.2. Ostrich “Algorithm”

- A joke about missing deadlock handling

- “Implemented” in most systems

- Pretend nothing special is happening

- (E.g., Java VMs act like ostriches)

- Reasoning

- Proper deadlock handling is complex

- Deadlocks are rare, result from buggy programs

- “Implemented” in most systems

(Refresh HTML presentation for other drawings)

4.3. Deadlock Prevention

- Prevent a defining condition for deadlocks from becoming true

Practical options

- Prevent condition (2), “hold and wait”: Request all necessary

resources at once

- Only possible in special cases, e.g., conservative/static 2PL in DBMS

- Threads either have no incoming or no outgoing edges in resource allocation graph → Cycles cannot occur

- Prevent condition (4), “circular wait”: Number resources,

request resources according to linear resource ordering

- Consider resources Rh and Rk with h < k

- Threads that need both resources must lock Rh first

- Threads that already requested Rk do not request Rh afterwards

- Requests for resources in ascending order → Cycles cannot occur

- Consider resources Rh and Rk with h < k

- Prevent condition (2), “hold and wait”: Request all necessary

resources at once

4.3.1. Linear Resource Ordering Example

- Money transfers between bank accounts revisited

- Locks acquired in order of account numbers

- A programming contract, not known by OS

- Suppose

myAccounthas number 42,yourAccountis 4711- Both threads try to lock

myAccountfirst (as 42 < 4711)- Only one succeeds, can also lock

yourAccount - The other thread gets blocked

- Only one succeeds, can also lock

- Both threads try to lock

- No deadlock

- (See Fig 4.21 in [Hai19] for an example of linear ordering in the context of the Linux scheduler)

4.4. Deadlock Avoidance

- Dynamic decision whether allocation may lead to deadlock

- If a deadlock cannot be ruled out easily: Do not perform that allocation but block the requesting thread (or return error code or raise exception)

- Consequently, deadlocks do never occur; they are avoided

- Classical technique

- Banker’s algorithm by Dijkstra

- Deny incremental allocation if “unsafe” state would arise

- Not used in practice

- Resources and threads’ requirements need to be declared ahead of time

- Banker’s algorithm by Dijkstra

4.5. Deadlock Detection

- Idea

- Let deadlocks happen

- Detect deadlocks, e.g., via cycle-check on resource allocation graph

- Periodically or

- After “unreasonably long” waiting time for lock or

- Immediately when thread tries to acquire a locked mutex

- Resolve deadlocks: typically, terminate some thread(s)

- Prerequisite to build graph

- Mutex records by which thread it is locked (if any)

- OS records for what mutex a thread is waiting

5. Further Challenges

5.1. Convoy Problem

- Suppose a central shared resource R exists

- Frequently accessed by lots of threads, protected by mutex M

- Preemption of thread T1 holding that mutex is likely

- Other threads wind up in wait queue of mutex, the convoy

- Thread switches without much progress

- (See [BGM+79] for origin of “convoy” in context of database transactions)

- Other threads wind up in wait queue of mutex, the convoy

- Suppose T1 continues

- T1 releases lock, which is reassigned to T2

- During its time slice, T1 wants R again, but M is now held by T2

- T1 gets blocked without much progress

- The same happens to the other threads

- The convoy persists for a long time

5.1.1. A Convoy Solution

- Change mutex behavior

- Proposed in [BGM+79]

- Do no immediately reassign mutex upon

unlock() - Instead, make all waiting threads runnable

- Without reassigning mutex

- (In addition, for performance reasons in case of failing locking attempt [BGM+79] suggests “to spin for a few instructions in the hope that the lock will become free”)

- Effect: T1 can

lock()M repeatedly during its time slice

5.2. Starvation

- A thread starves if its resource requests are repeatedly denied

- Examples in previous presentations

- Interrupt livelock

- Thread with low priority in presence of high priority threads

- Thread which cannot enter CS

- Famous illustration: Dining philosophers (next slide)

- No simple solutions

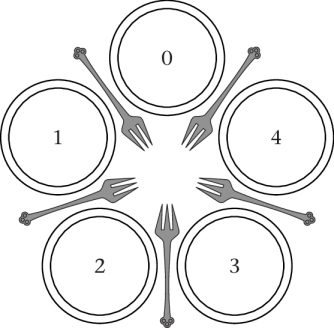

5.2.1. Dining Philosophers

- MX problem proposed by Dijkstra

- Philosophers sit in circle; eat and think repeatedly

- Two forks required for eating

- MX for forks

- Two forks required for eating

Dining Philosophers

“Figure 4.20 of [Hai17]” by Max Hailperin under CC BY-SA 3.0; converted from GitHub

5.2.2. Starving Philosophers

Starvation of P0

![Figure 4.20 of cite:Hai17]()

“Figure 4.20 of [Hai17]” by Max Hailperin under CC BY-SA 3.0; converted from GitHub

- P1 and P3 or P2 and P4 eat in parallel

- Then they wake the other pair

- P1 wakes P2; P3 wakes P4

- P2 wakes P1; P4 wakes P3

- Iterate

6. Conclusions

6.1. Summary

- MX to avoid race conditions

- Challenges

- Priority inversion

- Deadlocks

- Convoys

- Starvation

Bibliography

- [BGM+79] Blasgen, Gray, Mitoma & Price, The Convoy Phenomenon, SIGOPS Oper. Syst. Rev. 13(2), 20-25 (1979). https://doi.org/10.1145/850657.850659

- [CES71] Coffman, Elphick & Shoshani, System Deadlocks, ACM Comput. Surv. 3(2), 67-78 (1971). https://dl.acm.org/citation.cfm?doid=356586.356588.356588

- [Hai19] Hailperin, Operating Systems and Middleware – Supporting Controlled Interaction, revised edition 1.3.1, 2019. https://gustavus.edu/mcs/max/os-book/

- [LR80] Lampson & Redell, Experience with Processes and Monitors in Mesa, Commun. ACM 23(2), 105-117 (1980). https://dl.acm.org/citation.cfm?doid=358818.358824

- [Sta01] Stallings, Operating Systems: Internals and Design Principles, Prentice Hall, 2001.

- [Tan01] Tanenbaum, Modern Operating Systems, Prentice-Hall, 2001.

License Information

This document is part of an Open Educational Resource (OER) course on Operating Systems. Source code and source files are available on GitLab under free licenses.

Except where otherwise noted, the work “OS07: MX Challenges”, © 2017-2023 Jens Lechtenbörger, is published under the Creative Commons license CC BY-SA 4.0.

In particular, trademark rights are not licensed under this license. Thus, rights concerning third party logos (e.g., on the title slide) and other (trade-) marks (e.g., “Creative Commons” itself) remain with their respective holders.