OS09: Virtual Memory II

Based on Chapter 6 of [Hai19]

(Usage hints for this presentation)

This presentation is archived and will not receive further updates. Updated presentations on Operating Systems are available in the course IT Systems.

Computer Structures and Operating Systems 2023

Dr. Jens Lechtenbörger (License Information)

1. Introduction

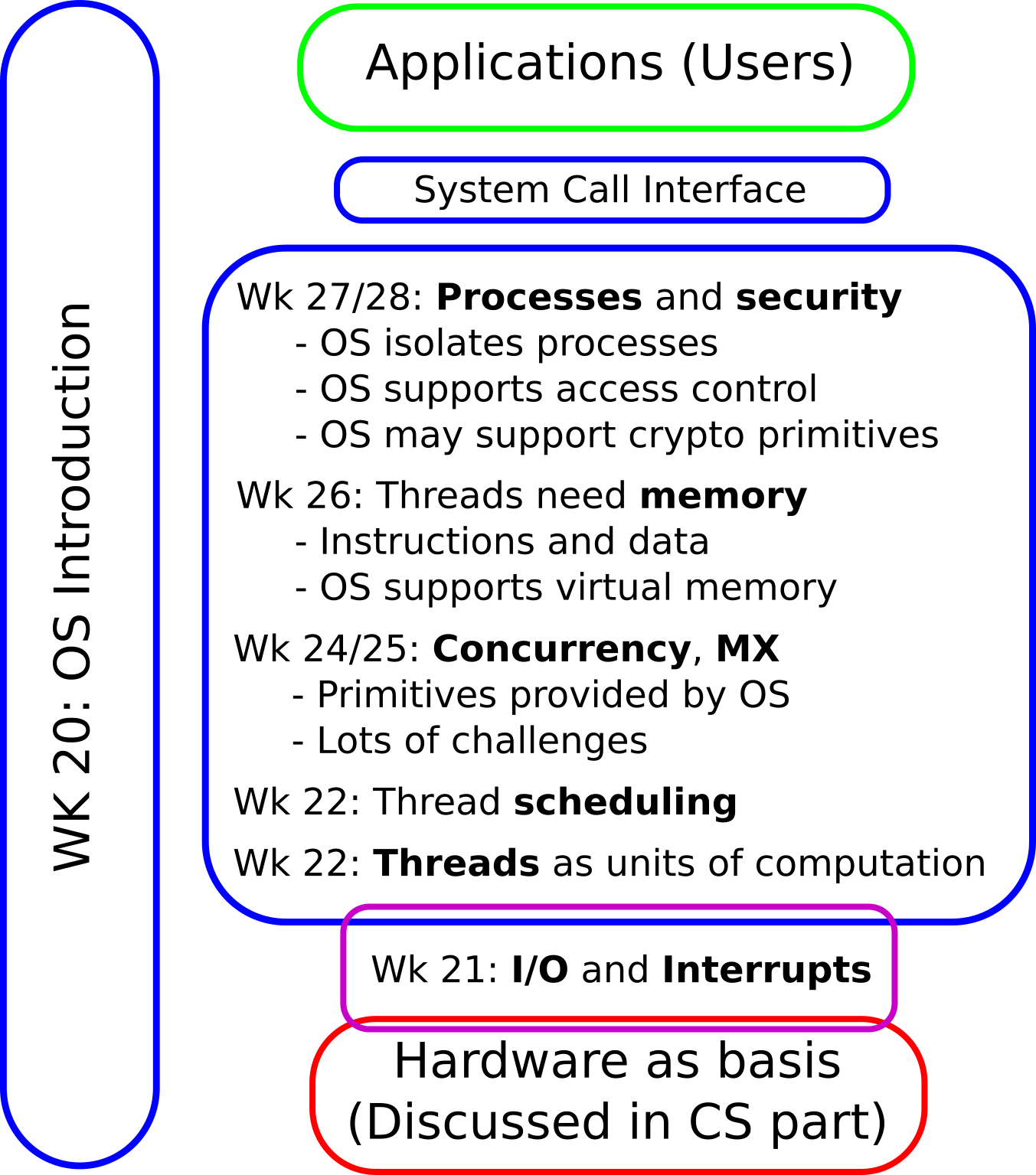

1.1. OS Plan

- OS Overview (Wk 20)

- OS Introduction (Wk 21)

- Interrupts and I/O (Wk 21)

- Threads (Wk 23)

- Thread Scheduling (Wk 24)

- Mutual Exclusion (MX) (Wk 25)

- MX in Java (Wk 25)

- MX Challenges (Wk 25)

- Virtual Memory I (Wk 26)

- Virtual Memory II (Wk 26)

1.2. Today’s Core Questions

- How can the size of page tables be reduced?

- How can address translation be sped up?

- How does the OS allocate frames to processes?

1.3. Learning Objectives

- Explain paging, swapping, and thrashing

- Discuss differences of different types of page tables

- Explain role of TLB in address translation

- Apply page replacement with FIFO, LRU, Clock

1.4. Retrieval Practice

1.4.1. Recall: Hash Tables

- Hash table = data

structure with search in O(1) on average

- Taught in Data Structures and Algorithms

- What are hash collisions, buckets, chaining?

1.4.2. Previously on OS …

- What is a virtual address, how is it related to page tables?

- What piece of hardware is responsible for address translation?

- How large are page tables? How many exist?

- What happens upon page misses?

- What is demand loading?

- The size of page tables poses a challenge.

1.4.3. Selected Questions

Table of Contents

2. Multilevel Page Tables

2.1. Core Idea

- So far: Virtual address is hierarchical object consisting of page number and offset

- Now multilevel page tables: Interpret page table as tree with

fixed depth, i.e., a fixed number of multiple levels

- (Visualizations on next two slides)

- For n levels, split page number into n smaller parts

- Two-level for 32 bits: Split 20 bits into two parts with 10 bits each

- To traverse page table (tree), use one part on each level

- Aside: On 64-bit machines, Linux introduced 5-level tables as default on 2019-09-16

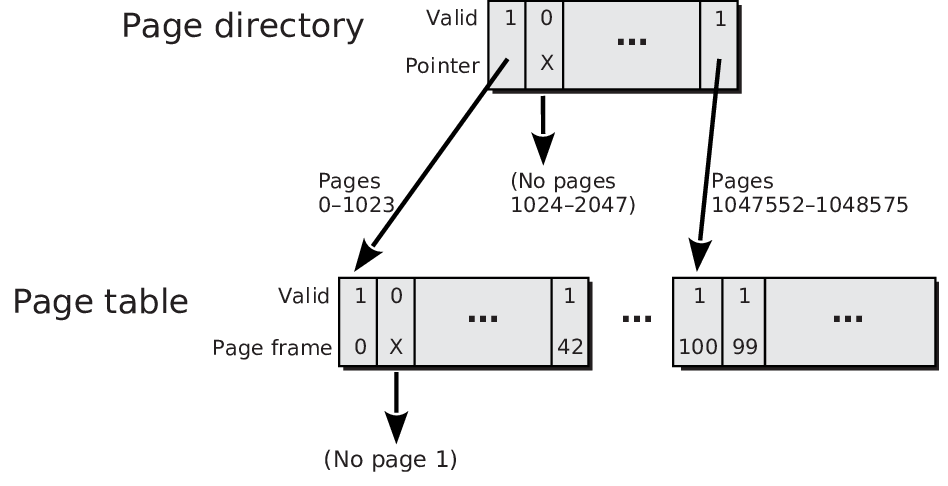

2.2. Two-Level Page Table

“IA-32 two-level page table” by Jens Lechtenbörger under CC BY-SA 4.0; Frame numbers and valid bits added to and third layer removed from Figure 6.13 of [Hai17] by Max Hailperin under CC BY-SA 3.0. Source at GitLab.

Note: Page table contains entries of an ordinary page table. Previously, valid bit and page frame numbers were shown in columns; here, they are shown in rows.

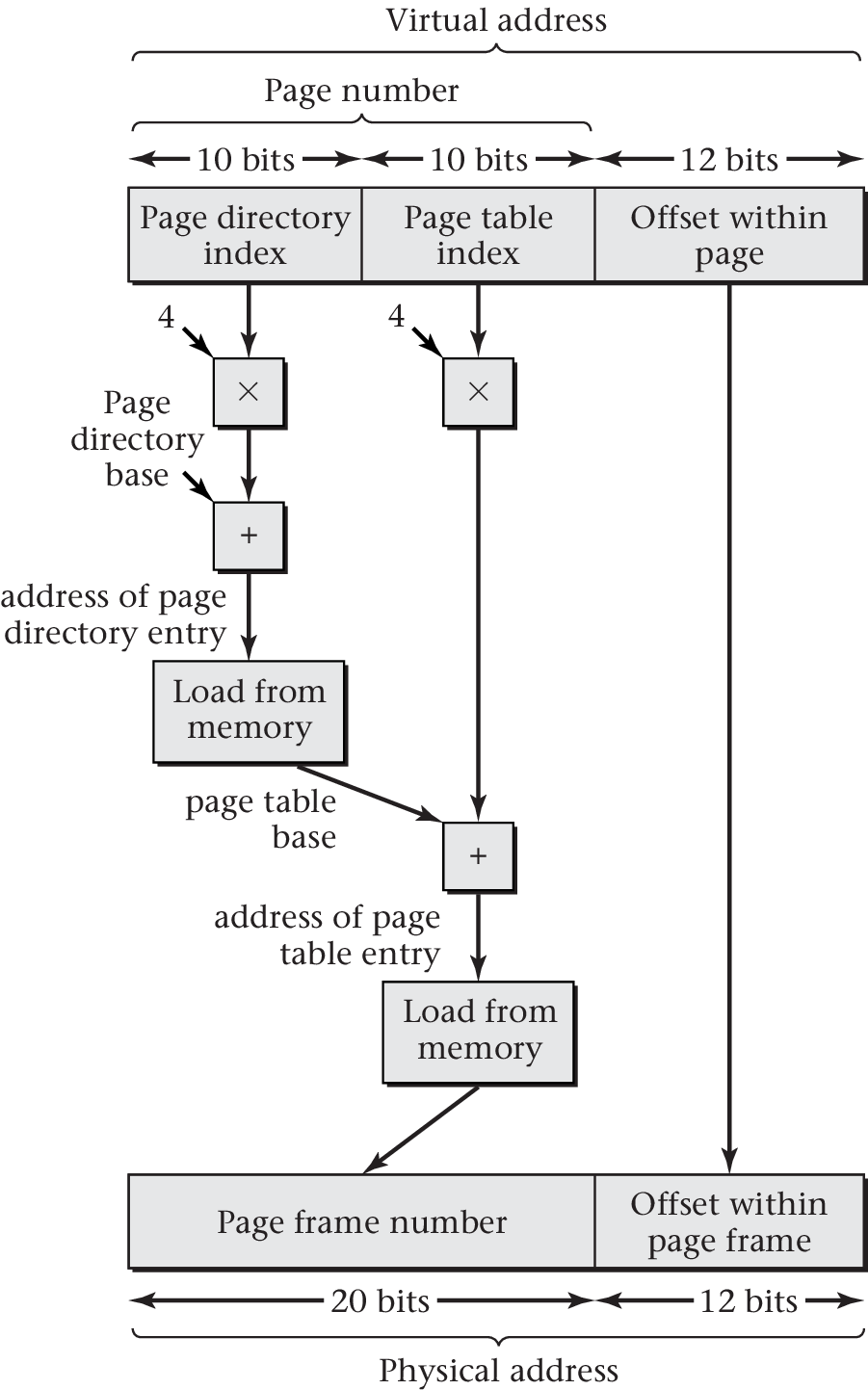

2.2.1. Two-Level Address Translation

“Figure 6.14 of [Hai17]” by Max Hailperin under CC BY-SA 3.0; converted from GitHub

3. Inverted Page Tables and Hardware Support

3.1. Inverted Page Tables

- Recall: Page tables can be huge, per process

- Key insights to reduce amount of memory:

- Number of frames is (usually) much smaller than aggregate number of pages

- Thus, let us record information per frame, not per page and process

- (For each frame, what page of what process is currently contained?)

- Obtain frame for page via hashing of page number

- PowerPC, UltraSPARC, IA-64

3.1.1. Example

Simplistic example, 4 frames, hashing via

modulo 4![Figure 6.10 of cite:Hai17]()

“Figure 6.10 of [Hai17]” by Max Hailperin under CC BY-SA 3.0; converted from GitHub

- (Inverted page table below based on Fig. 6.15 of [Hai19]; represents main memory situation shown to the right)

- E.g., page 0: 0 mod 4 = 0; thus look into row 0, find that page 0 is contained in frame 1

| Valid | Page | Process | Frame |

|---|---|---|---|

| 1 | 0 | 42 | 1 |

| 1 | 1 | 42 | 0 |

| 1 | 6 | 42 | 3 |

| 0 | X | X | X |

3.1.2. Observations

- Constant table size

- Proportional to main memory size

- Independent of number of processes

- One entry per frame is sufficient

- Entries are large

- Page numbers included (hash collisions)

- Process IDs included (hash collisions)

- Pointers for overflow handling necessary (not shown above)

- If there is one entry per frame, the frame number does not need to be included (implicit as entry’s number)

- Side note: Efficient use in practice is hard

- See comments by Linus Torvalds if you are interested

3.2. Hardware Support for Address Translations

- Lots of architectures support page tables in hardware

- Multilevel and/or inverted page tables

- Page table walker does translation in hardware

- Architecture specifies page table structure

- For multilevel page tables, special register stores start address of page directory

- Architecture specifies page table structure

- Special cache to reduce translation latency, the TLB (next slide)

3.2.1. Translation Lookaside Buffer (TLB)

- Access to virtual address may require several memory accesses

→ Overhead

- Access to page table (one per level)

- Access to data

- Improvement: Caching

- Special cache, called TLB, for page table entries

- Recently used translations of page numbers to frame numbers

- MMU searches in TLB first to build physical address

- Note: Search for page, not entire virtual address

- If not found (TLB miss): Page table access

- Note: Context switch may require TLB flush → Overhead

- Reduced when entries have address space identifier (ASID)

- See [Hai19] if you are interested in details

- Reduced when entries have address space identifier (ASID)

- Special cache, called TLB, for page table entries

4. Policies

4.1. Terminology

- To page = to load a page of data into RAM

- Managed by OS

- Paging causes swapping and may lead to thrashing as discussed next

- Paging policies, to be discussed afterwards, aim to reduce both phenomena

4.1.1. Swapping

- Under-specified term

- Either (desktop OSs)

- Usual paging in case of page faults

- Page replacement: Swap one page out of frame to disk, another one in

- Discussed subsequently

- Page replacement: Swap one page out of frame to disk, another one in

- Usual paging in case of page faults

- Or (e.g., mainframe OSs)

- Swap out entire process (all of its pages and all of its threads)

- New state for its threads: swapped/suspended

- No thread can run as nothing resides in RAM

- New state for its threads: swapped/suspended

- Swap in later, make process/threads runnable again

- (Not considered subsequently)

- Swap out entire process (all of its pages and all of its threads)

4.1.2. Thrashing

- Permanent swapping without progress

- Another type of livelock

- Time wasted with overhead of swapping and context switching

- Typical situation: no free frames

- Page faults are frequent

- OS blocks thread, performs page replacement via swapping

- After context switch to different thread, again page fault

- More swapping

- Page faults are frequent

- Reason: Too many processes/threads

- Mainframe OSs may swap out entire processes then

- Control so-called multiprogramming level (MPL)

- Enforce upper bound on number of active processes

- Control so-called multiprogramming level (MPL)

- Desktop OSs let users deal with this

- Mainframe OSs may swap out entire processes then

4.2. Fetch Policy

- General question: When to bring pages into RAM?

- Popular alternatives

- Demand paging (contrast with demand loading)

- Only load page upon page fault

- Efficient use of RAM at cost of lots of page faults

- Prepaging

- Bring several pages into RAM, anticipate future use

- If future use guessed correctly, fewer page faults result

- Also, loading a random hard disk page into RAM involves rotational delay

- Such delays are reduced when neighboring pages are read in one operation

- (Even for SSDs, multiple random I/O operations are slower than a single sequential I/O operation of the same size as each operation comes with overhead)

- Demand paging (contrast with demand loading)

4.2.1. Prepaging ideas

- Clustered paging, read around

- Do not read just one page but a cluster of neighboring pages

- Can be turned on or off in system calls

- Do not read just one page but a cluster of neighboring pages

- OS and program start

- OSs may monitor page faults, record and use them upon next start

to pre-load necessary data

- Linux with readahead system call

- Windows with Prefetching and SuperFetch

- OSs may monitor page faults, record and use them upon next start

to pre-load necessary data

4.3. Replacement Policy

- What frame to re-use when a page fault occurs while all frames are full?

- Recall goal: Keep working sets in RAM

- Local vs global replacement

- Local: Replace within frames of same process

- When to in- or decrease resident set size?

- Global: Replace among all frames

- Local: Replace within frames of same process

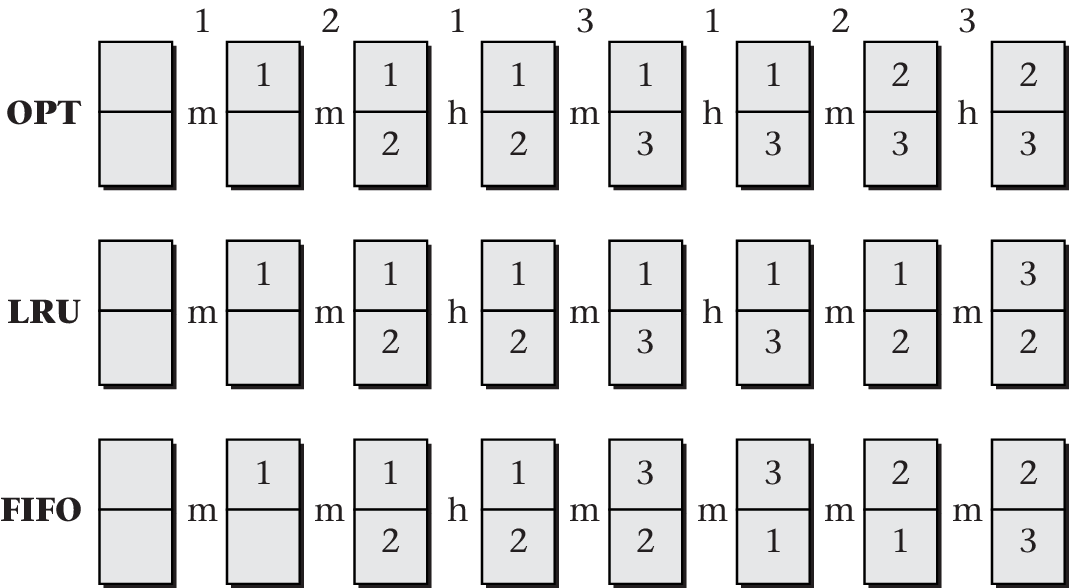

4.3.1. Sample Replacement Policies

- OPT: Hypothetical optimal replacement

- Replace page that has its next use furthest in the future

- Needs knowledge about future, which is unrealistic

- Replace page that has its next use furthest in the future

- FIFO: First In, First Out replacement

- Replace oldest page first

- Independent of number/distribution of references

- Replace oldest page first

- LRU: Least Recently Used replacement

- Replace page that has gone the longest without being accessed

- Based on principle of locality, upcoming access unlikely

- Replace page that has gone the longest without being accessed

- Clock (second chance)

- Replace “unused” page

- Use 1 bit to keep track of “recent” use

- Replace “unused” page

4.3.2. Replacement Examples

“Figure 6.19 of [Hai17]” by Max Hailperin under CC BY-SA 3.0; converted from GitHub

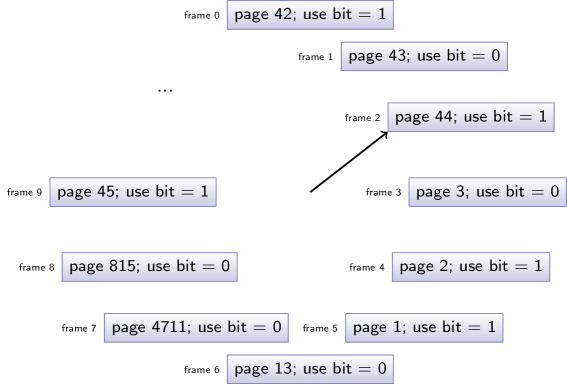

4.3.3. Clock (Second Chance)

Frames arranged in cycle, pointer to next frame

![Clock algorithm for page replacement]()

- (Naming: Pointer as hand of clock)

- Pointer “wraps around” from “last” frame to “first” one

- Use-bit per frame

- Set to 1 when page referenced/used

4.3.4. Beware of the Use Bit

- Use-bit may be part of hardware support

- Use-bit set to 0 when page swapped in

- Under demand paging, use-bit immediately set to 1 due to reference

- Following examples assume that page is referenced for use

- Thus, use-bit is 1 for new pages

- Under prepaging, use-bit may stay 0

4.3.5. Clock (Second Chance): Algorithm

- If page hit

- Set use-bit to 1

- Keep pointer unchanged

- If page fault

- Check frame at pointer

- If free, use immediately, advance pointer

- Otherwise

- If use-bit is 0, then replace; advance pointer

- If use-bit is 1, reset bit to 0, advance pointer, repeat (Go to “Check frame at pointer”)

- (Naming: In contrast to FIFO, page gets a second chance)

4.3.6. Clock (Second Chance): Animation

- Consider reference of page 7 in previous situation

- All frames full, page 7 not present in RAM

Page fault

![Animation of Clock algorithm for page replacement]()

- Frame at pointer is 2, page 44 has use bit of 1

- Reset use bit to 0, advance pointer to frame 3

- Frame at pointer is 3, page 3 has use bit of 0

- Replace page 3 with 7, set use bit to 1 due to reference

- Advance pointer to frame 4

- Frame at pointer is 2, page 44 has use bit of 1

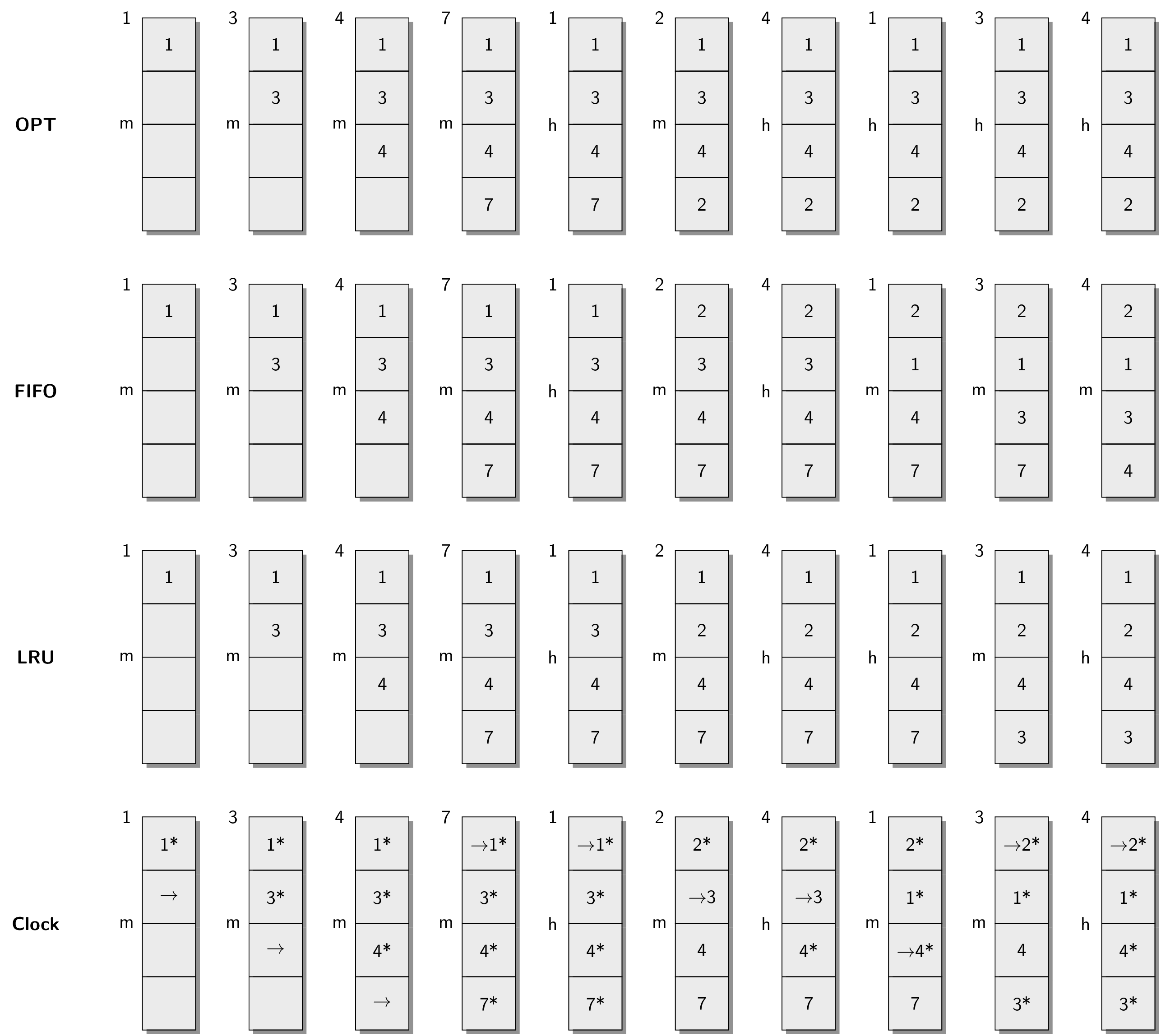

4.3.7. Clock: Different Animation

- Situation

- Four frames of main memory, initially empty

- Page references: 1, 3, 4, 7, 1, 2, 4, 1, 3, 4

4.3.8. More Replacement Examples

4.4. Self-Study Task

Perform the following task in Learnweb.

Apply the page replacement algorithms OPT, FIFO, LRU, and Clock (Second Chance) for four frames of main memory to the following stream of page references under demand paging: 1, 3, 4, 7, 1, 2, 4, 1, 3, 4

Verify your results against the previous slide and raise any questions that you may have.

5. Conclusions

5.1. Summary

- Virtual memory provides abstraction over RAM and secondary storage

- Paging as fundamental mechanism for flexibility and isolation

- Page tables managed by OS

- Hardware support via MMU with TLB

- Management of “necessary” pages is complex

- Tasks include prepaging and page replacement

Bibliography

- [Hai19] Hailperin, Operating Systems and Middleware – Supporting Controlled Interaction, revised edition 1.3.1, 2019. https://gustavus.edu/mcs/max/os-book/

License Information

This document is part of an Open Educational Resource (OER) course on Operating Systems. Source code and source files are available on GitLab under free licenses.

Except where otherwise noted, the work “OS09: Virtual Memory II”, © 2017-2023 Jens Lechtenbörger, is published under the Creative Commons license CC BY-SA 4.0.

In particular, trademark rights are not licensed under this license. Thus, rights concerning third party logos (e.g., on the title slide) and other (trade-) marks (e.g., “Creative Commons” itself) remain with their respective holders.