Virtual Memory II

(Usage hints for this presentation)

IT Systems, Summer Term 2026

Dr.-Ing. Matthes Elstermann

1. Introduction

1.1. Retrieval Practice

1.2. Terminology

- To page = to load a page of data into RAM

- Managed by OS

- Paging may cause swapping and may lead to thrashing as discussed next

- Paging policies, to be discussed afterwards, aim to reduce both phenomena

1.2.1. Swapping

Under-specified term

- Either (desktop OSs)

- Usual paging in case of page faults

- Page replacement: Swap one page out of frame to disk, another one in

- Discussed subsequently

- Usual paging in case of page faults

- Or (e.g., mainframe OSs, not considered subsequently)

- Swap out entire process (all of its pages and all of its threads)

- New state for its threads: swapped/suspended

- No thread can run as nothing resides in RAM

- Control so-called multiprogramming level (upper bound on number of active processes)

- Swap in later, make process/threads runnable again

- Swap out entire process (all of its pages and all of its threads)

- Either (desktop OSs)

1.2.2. Thrashing

- Permanent swapping without progress

- Another type of livelock

- Time wasted with overhead of swapping and context switching

- Situation: Too many processes/threads, too few free frames

- Page faults are frequent

- OS blocks thread, performs page replacement via swapping

- After context switch to different thread, again page fault

- More swapping

- Page faults are frequent

Agenda

- Part 1

- Part 2

2. Fetching Pages

2.1. Files and Virtual Memory

- Data kept persistently in files on secondary storage

When (thread of) process opens file, file can be mapped into virtual address space

- Initially without loading data into RAM

- E.g.,

page 2in big picture

- E.g.,

Page accesses in that file trigger page faults

- Handled by OS by loading those pages into RAM

- Marked read-only and clean

- Handled by OS by loading those pages into RAM

Upon write, MMU triggers interrupt, OS makes page writable and remembers it as dirty (changed from clean)

- Typically with MMU hardware support via dirty bit in page table

- Dirty = to be written to secondary storage at some point in time

- After being written, marked as clean and read-only

- Initially without loading data into RAM

2.2. Fetch Policy

- General question: When to bring pages into RAM?

Popular alternatives

Demand paging

- Only load page upon page fault

- Efficient use of RAM at cost of lots of page faults

Prepaging

- Bring several pages into RAM, anticipate future use

- If future use guessed correctly, fewer page faults result

- Also, loading a random hard disk page into RAM involves rotational delay

- Such delays are reduced when neighboring pages are read in one operation

- (Even for SSDs, multiple random I/O operations are slower than a single sequential I/O operation of the same size as each operation comes with overhead)

2.2.1. Prepaging ideas

- Clustered paging, read around

- Do not read just one page but a cluster of neighboring pages

- Can be turned on or off in system calls

- Do not read just one page but a cluster of neighboring pages

- OS and program start

- OSs may monitor page faults, record and use them upon next start

to preload necessary data

- Linux with readahead system call

- Windows with Prefetching and SuperFetch

- OSs may monitor page faults, record and use them upon next start

to preload necessary data

2.2.2. Working Set

- OS loads part of program into main memory

- Resident set: Pages currently in main memory

At least current instruction (and required data) necessary in main memory

Principle of locality

- Memory references typically close to each other

- Few pages sufficient for some interval

- Working set: Necessary pages for some interval

- Aim: Keep working set in resident set

- Replacement policies on subsequent slides

- Aim: Keep working set in resident set

2.2.3. Beyond Learning Objectives: Datacenter Memory

- Main memory management at Meta: (Maruf et al. 2023)

- Modern memory is organized in tiers with different

characteristics (e.g., cost, size, bandwidth, latency)

- E.g.: DRAM, NVM, low-power DRAM

- Accessible via CXL

- Estimate page temperature as criterion for transparent page

placement (TPP) in specific tier

- Page is hot if reuse is likely within 2 minutes, warm for reuse within 10 minutes, cold otherwise

- Idea: Move pages between faster and slower tiers based on temperature

- Sample hardware counters (e.g., cache misses) to estimate temperature

- Integrated into Linux kernel

- Modern memory is organized in tiers with different

characteristics (e.g., cost, size, bandwidth, latency)

3. Replacing Pages

3.1. Sample Replacement Policies

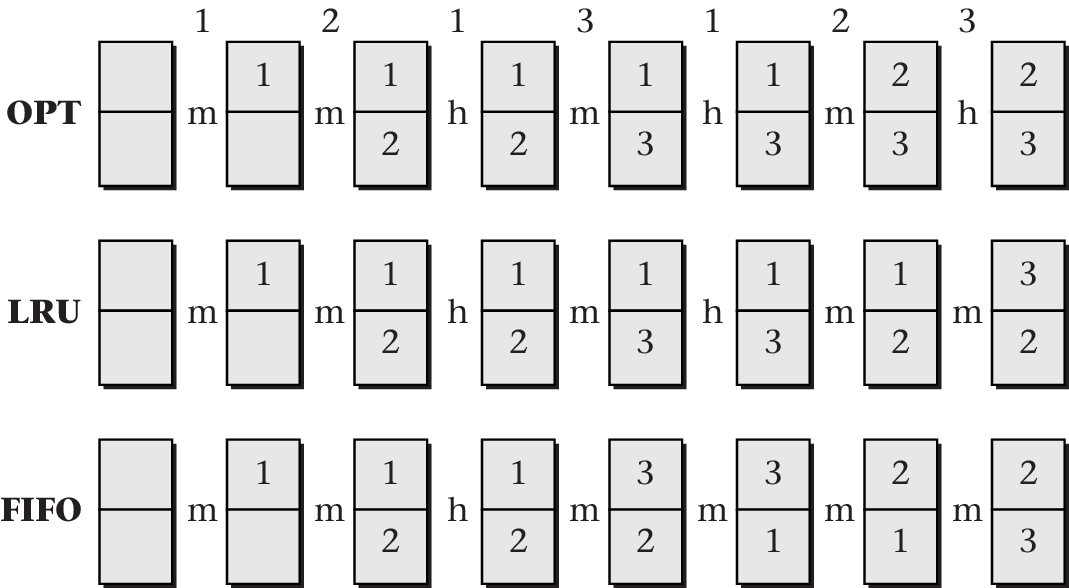

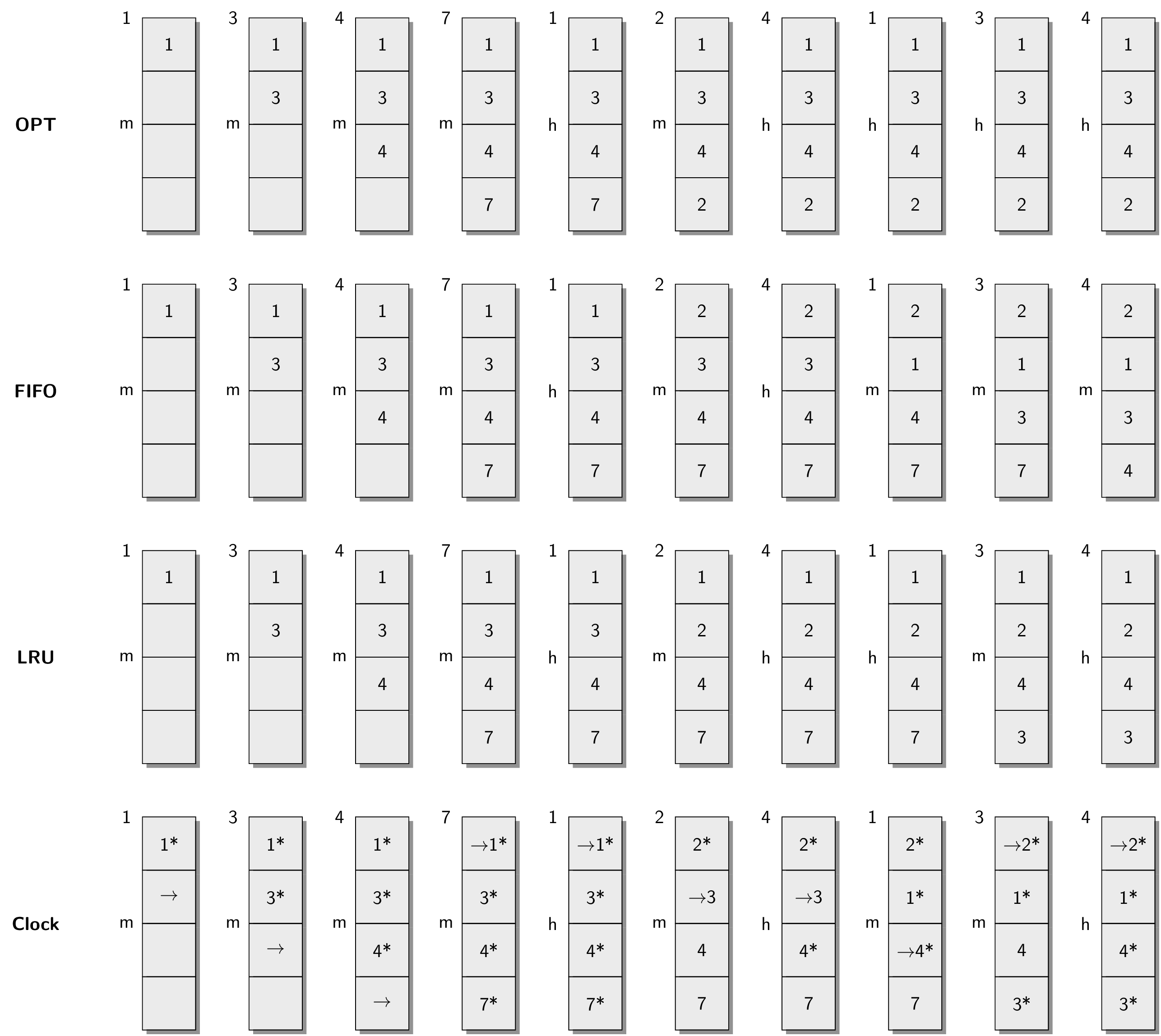

OPT: Hypothetical optimal replacement

- Replace page that has its next use furthest in the future

- Needs knowledge about future, which is unrealistic

- Replace page that has its next use furthest in the future

FIFO: First In, First Out replacement

- Replace oldest page first

- Independent of number/distribution of page references

- Replace oldest page first

LRU: Least Recently Used replacement

- Replace page that has gone the longest without being accessed

- Based on principle of locality, upcoming access unlikely

- Replace page that has gone the longest without being accessed

- Clock (second chance)

- Replace “unused” page

- Use 1 bit to keep track of “recent” use

- Replace “unused” page

3.2. Replacement Examples

“Figure 6.19 of (Hailperin 2019)” by Max Hailperin under CC BY-SA 3.0; converted from GitHub

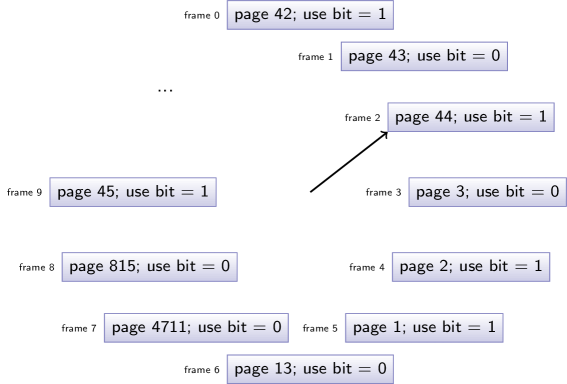

3.3. Clock (Second Chance)

Frames arranged in cycle, pointer to next frame

![Clock algorithm for page replacement]()

- (Naming: Pointer as hand of clock)

- Pointer “wraps around” from “last” frame to “first” one

- Use-bit per frame

- Set to 1 when page referenced/used

3.3.1. Beware of the Use Bit

- Use-bit may be part of hardware support

- Use-bit set to 0 when page swapped in

- Under demand paging, use-bit changed to 1 due to reference

- Following examples assume that page is referenced for use

- Thus, use-bit is 1 for new pages

- Under prepaging, use-bit may stay 0

3.3.2. Clock (Second Chance): Algorithm

If page hit

- Set use-bit to 1

- Keep pointer unchanged

If page miss

- Check frame at pointer

- If free, use immediately, advance pointer

- Otherwise

- If use-bit is 0, then replace; advance pointer

- If use-bit is 1, reset bit to 0, advance pointer, repeat (Go to “Check frame at pointer”)

- (Naming: In contrast to FIFO, page gets a second chance)

3.3.3. Clock (Second Chance): Animation

- Consider reference of page 7 in previous situation

- All frames full, page 7 not present in RAM

Page miss

![Animation of Clock algorithm for page replacement]()

- Frame at pointer is 2, page 44 has use bit of 1

- Reset use bit to 0, advance pointer to frame 3

- Frame at pointer is 3, page 3 has use bit of 0

- Replace page 3 with 7, set use bit to 1 due to reference

- Advance pointer to frame 4

- Frame at pointer is 2, page 44 has use bit of 1

3.3.4. Clock: Different Animation

- Situation

- Four frames of main memory, initially empty

- Page references: 1, 3, 4, 7, 1, 2, 4, 1, 3, 4

3.4. More Replacement Examples

3.5. Self-Study Task

Perform the following task in Learnweb.

Apply the page replacement algorithms OPT, FIFO, LRU, and Clock (Second Chance) for four frames of main memory to the following stream of page references under demand paging: 1, 3, 4, 7, 1, 2, 4, 1, 3, 4

Verify your results against the previous slide and raise any questions that you may have.

4. Conclusions

4.1. Challenge: Page Table Sizes

E.g., 32-bit addresses with page size of 4 KiB (212 B)

- Virtual address space consists of up to 232 B = 4 GiB = 220 pages

- Every page with entry in page table

If 4 bytes per entry, then 4 MiB (222 B) per page table

Page table itself needs 210 pages/frames! Per process!

Much worse for 64-bit addresses

- E.g., 48 bits, 4 KiB pages, 8 B per entry: 236 * 8 B = 512 GiB

- Virtual address space consists of up to 232 B = 4 GiB = 220 pages

Two approaches to reduce page table sizes

- Multilevel (or hierarchical) page tables

- Tree-like structure, efficiently representing large unused areas

- Root, called page directory

- Entries cover larger address space portions

- Inverted page tables

- Multilevel (or hierarchical) page tables

4.2. SIEVE for Caching

- Page replacement policies also work for caching

- E.g., for web contents or key-value stores

- LRU is highly popular

- (Zhang et al. 2024) presents SIEVE as variant of Clock for caches

- Web page with visualization

- Also with use-bit (“Visited”) and hand/pointer

- Does not replace candidate, instead:

- Remove candidate from cache

- Insert new contents at head of queue

- Superior performance demonstrated

- Adopted in several systems and cache libraries

- Web page with visualization

4.3. Summary

- Virtual memory provides abstraction over RAM and secondary storage

- Isolation of processes

- Paging as fundamental mechanism for flexibility

- Page tables managed by OS

- Hardware support via MMU with TLB

- Management of “necessary” pages is complex

- Tasks include prepaging and page replacement

Bibliography

License Information

Source files are available on GitLab (check out embedded submodules) under free licenses. Icons of custom controls are by @fontawesome, released under CC BY 4.0.

Except where otherwise noted, the work “Virtual Memory II”, © 2017-2025 Jens Lechtenbörger, is published under the Creative Commons license CC BY-SA 4.0.