Computer Architecture

(Usage hints for this presentation)

IT Systems, Summer Term 2026

Dr.-Ing. Matthes Elstermann

1. Introduction

1.1. Today’s Core Question

- How do the previously built chips fit together in a computer architecture?

- Based on Chapter 5 of (Nisan and Schocken 2005)

1.2. Learning Objectives

- Explain von Neumann architecture and discuss its principles, in general and in relation to Hack

- Discuss implications of Moore’s law

- Build, test, and analyze Hack computer

- Trace execution of programs (e.g., program counter, register and memory contents)

1.3. Retrieval Practice

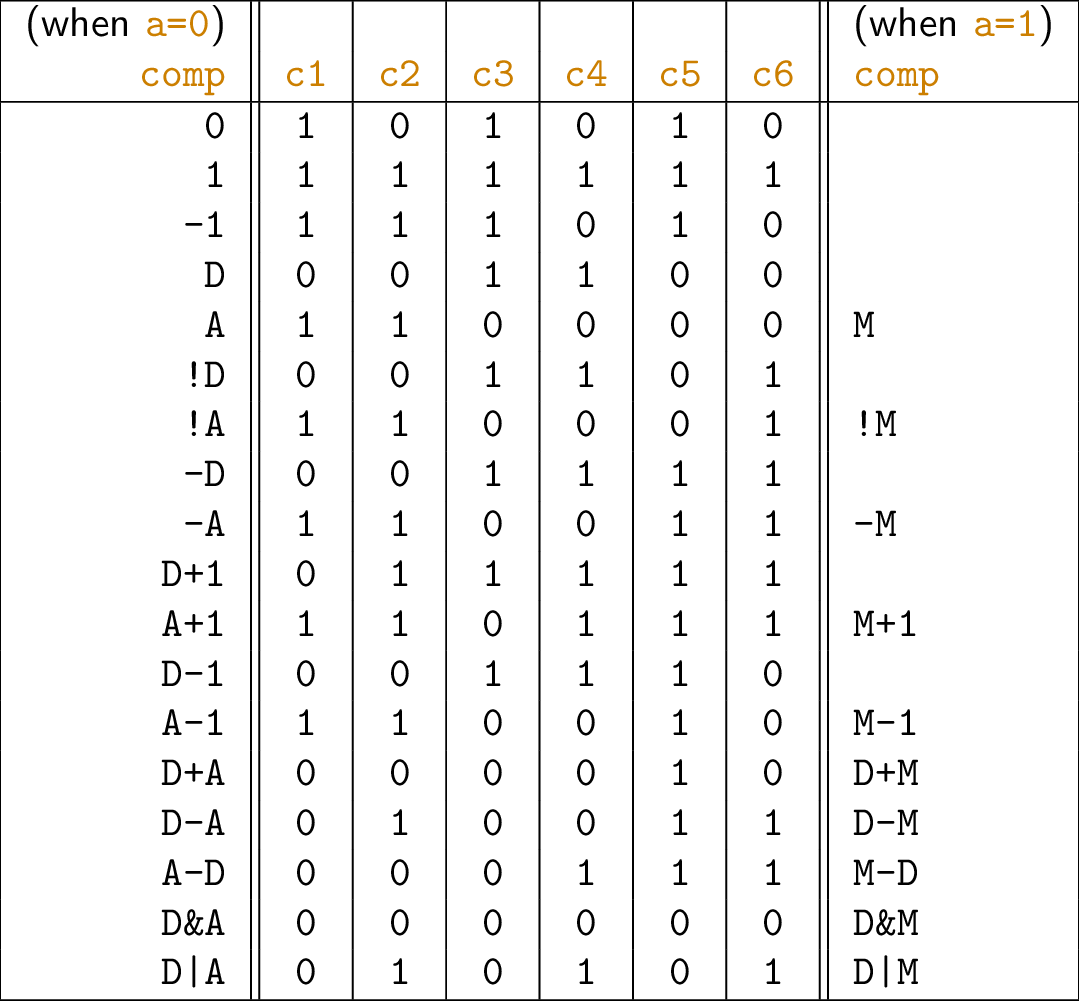

- How to control the ALU?

- What is the Memory Hierarchy?

- What are the major components of the Hack Computer?

- How do binary Hack machine instructions look like?

Agenda

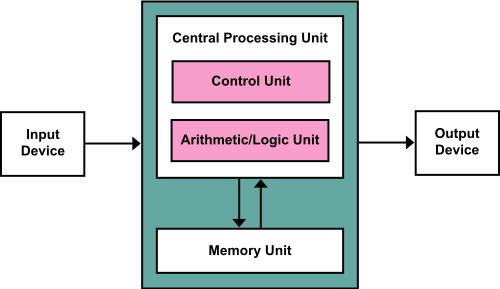

2. Von Neumann Architecture

2.1. Sketch of Von Neumann Architecture

“von Neumann Architecture” by Kapooht under CC BY-SA 3.0; converted from Wikimedia Commons

- Design proposed in (von Neumann 1945)

- Note: ROM is not part of von Neumann architecture

- Actually, Harvard architecture is variant of Hack with separate memories for data and instructions

- Details are not important for us

2.2. Von Neumann Principles

Major principles

Stored program concept

- Instructions stored in memory, just as data, modifiable

- General-purpose, programmable

Single CPU

- Singe-Instruction Single-Data (SISD) principle

- (In contrast to parallelism)

Von Neumann Bottleneck

- Fast CPU fetches every instruction and data over single bus from slow memory

- Memory wall according to (Wulf and McKee 1995)

- CPU speeds increase much more than RAM speeds

- System performance bounded by memory speed

- Caching and

multi-threading as mitigation

- Threads to be explained in OS part

2.3. Fetch-Decode-Execute Cycle

Use program counter to fetch instruction from memory

![von Neumann Architecture]()

Figure under CC BY-SA 3.0

- Control unit decodes bits of instruction to determine operation

- CPU executes operation

- Maybe load data

- Perform (ALU) operation

- Maybe write result

- Update program counter

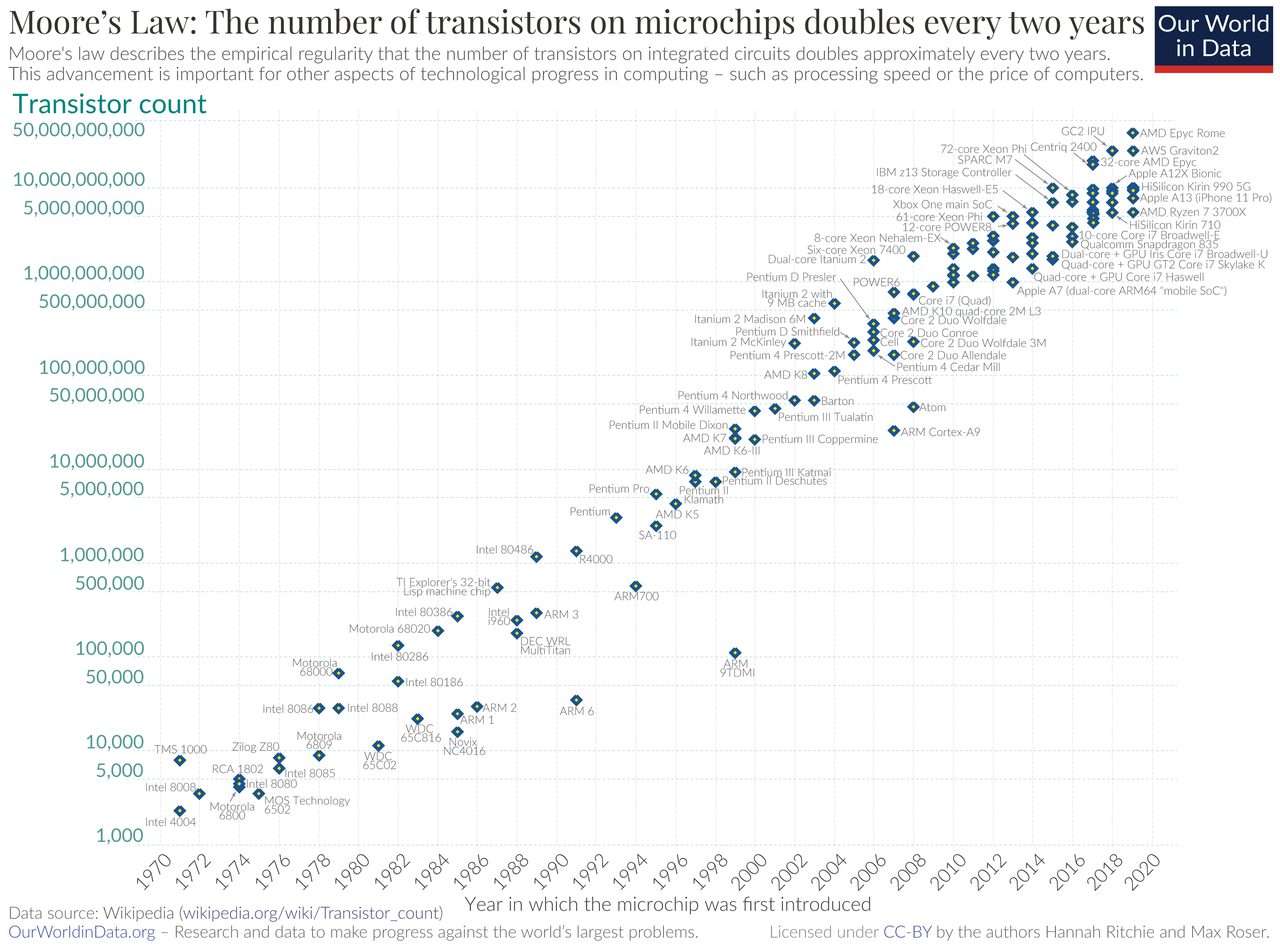

3. Moore’s Law

Published in (Moore 1965)

![Gordon Moore]()

Figure under CC BY-SA 3.0 Deed

“The complexity for minimum component costs has increased at a rate of roughly a factor of two per year […]. Certainly over the short term this rate can be expected to continue, if not to increase. Over the longer term, the rate of increase is a bit more uncertain, although there is no reason to believe it will not remain nearly constant for at least 10 years. That means by 1975, the number of components per integrated circuit for minimum cost will be 65,000.

I believe that such a large circuit can be built on a single wafer.”

- Later corrected to “double every two years”

- Exponential improvements

3.1. Sample Numbers

“Moore's Law” by Hannah Ritchie and Max Roser under CC BY 4.0; from Wikimedia Commons

3.2. Future Perspectives

Chip’s “speed” doubled every two years

Due to continued miniaturization

Smaller transistors need less power, switch faster

- Until limits of physics reached

- Intel Skylake (2015) transistors are around 100 atoms across

- Leak current, heat, quantum effects

To limit energy usage, only parts of a chip are powered-on

- Powered-off areas referred to as “dark silicon” (Esmaeilzadeh et al. 2011)

Options according to (Lundstrom and Alam 2022) (beyond class)

- 2D nanoelectronics, 3D terascale integration, functional integration

3.3. Parallel Programming

- For decades, individual CPUs became more powerful

- Now, CPUs contain more cores

Need parallel programming to make programs faster

- OS Topics

- Processes and threads

- Processes are programs in execution

- Each process can have multiple threads of execution

- Each core can execute a different thread

- Needs to be programmed

- Processes are programs in execution

- Concurrency and mutual exclusion

- Protect shared data structures (e.g., via locks)

- Otherwise, data structures will be corrupted (cf. locking and update anomalies in database systems)

- Programmers must learn this

- Protect shared data structures (e.g., via locks)

- Processes and threads

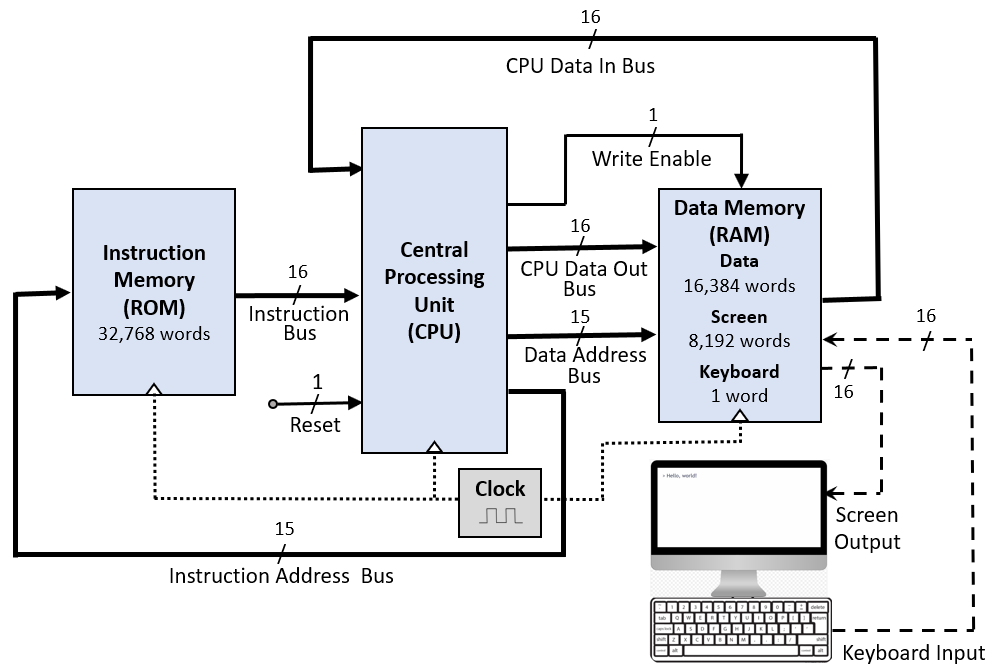

4. Hack Computer

4.1. Hack Input/Output Devices

Recall: Memory-mapped I/O

![Hack Computer]()

“Hack Computer” by user52174 under CC BY-SA 4.0; from StackExchange

4.2. Hack Data Memory

Specification in

Memory.hdlCHIP Memory { IN in[16], load, address[15]; OUT out[16]; Parts: ... }- Output

outwith contents of memory locationaddress - If

loadthen storeinataddress - Different

addressranges- 0-16383: Access to

RAM16K - 16384-24575:

Access to

Screen - 24576:

Access to

Keyboard - Larger values are invalid

- 0-16383: Access to

- Output

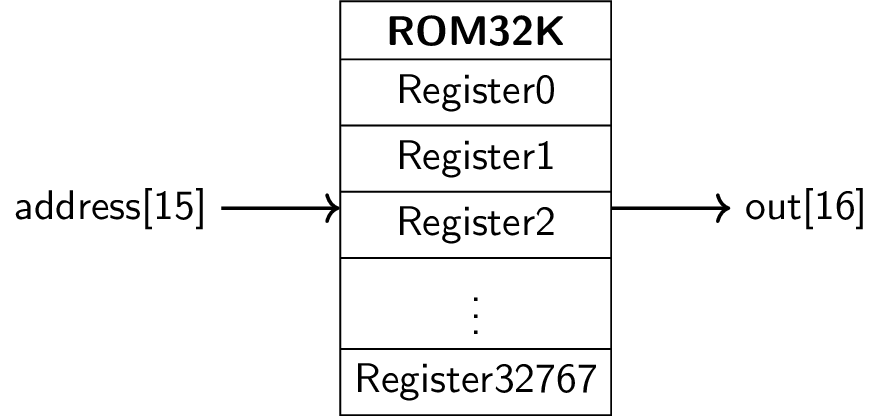

4.3. Hack Instruction Memory

Recall

ROM32K

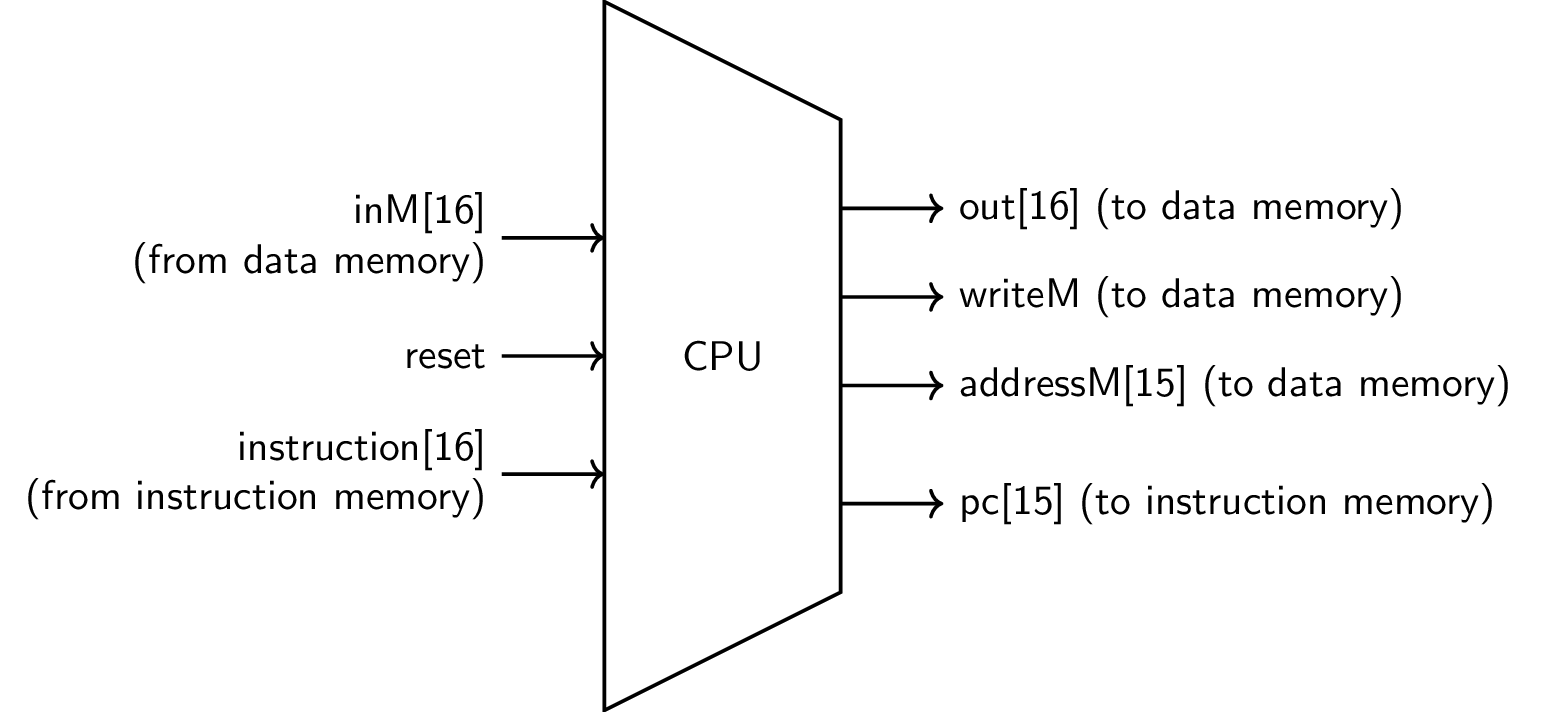

4.4. Hack CPU Chip

Specification via machine language and

CPU.hdlDetails of machine language include:

- Three registers: A, D, PC

- Implicit memory location M (addressed by A register)

CHIP CPU { IN inM[16], // M value input (M = contents of Memory[A]) instruction[16], // Instruction for execution reset; // Signals whether to re-start the current // program (reset=1) or continue executing // the current program (reset=0). OUT outM[16], // M value output writeM, // Write into M? addressM[15], // Address in data memory (for M) pc[15]; // address of next instruction PARTS: ...}

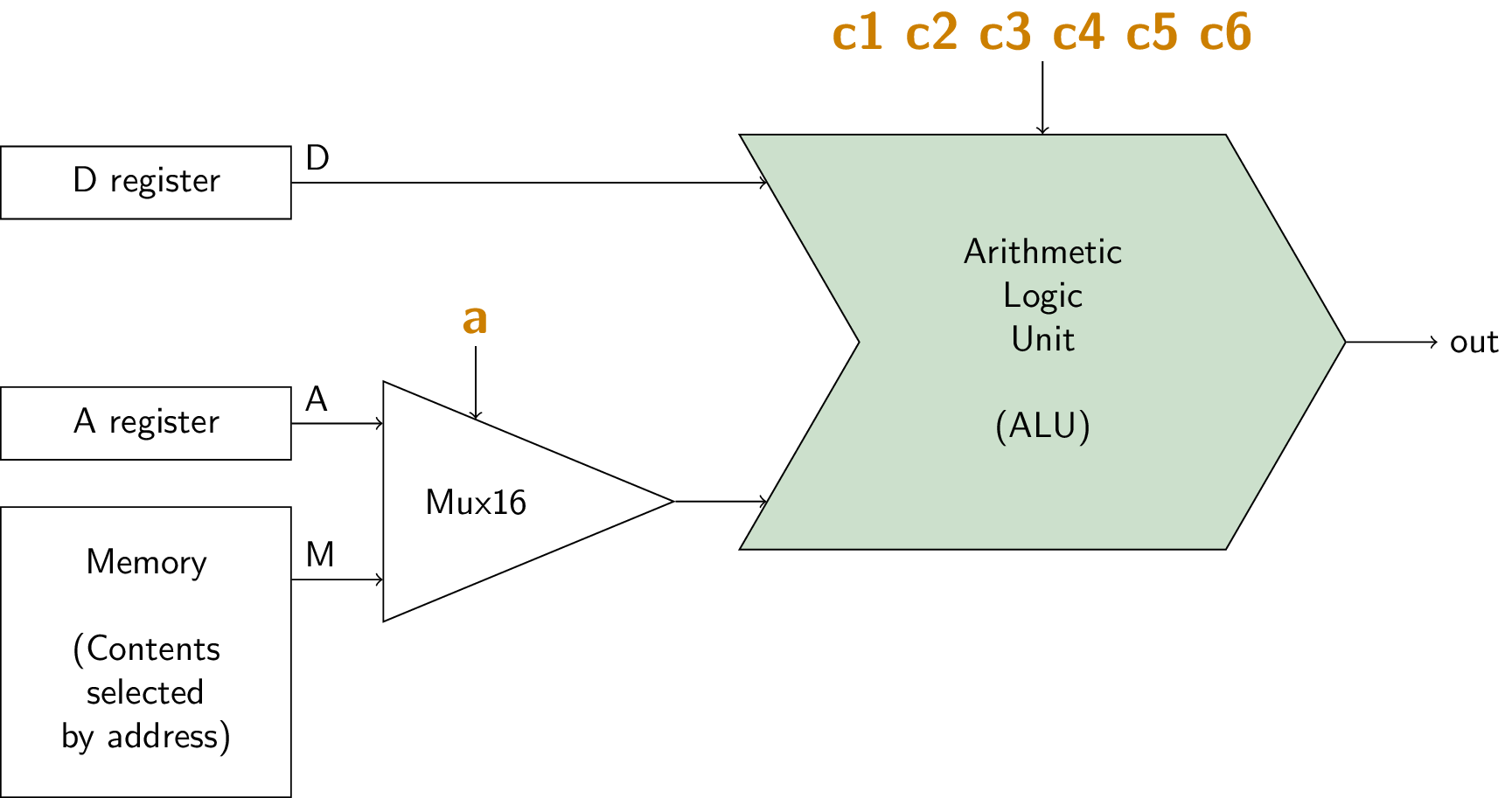

4.4.1. ALU with Registers

- Recall C instruction

- 1 1 1 a c1 c2 c3 c4 c5 c6 d1 d2 d3 j1 j2 j3

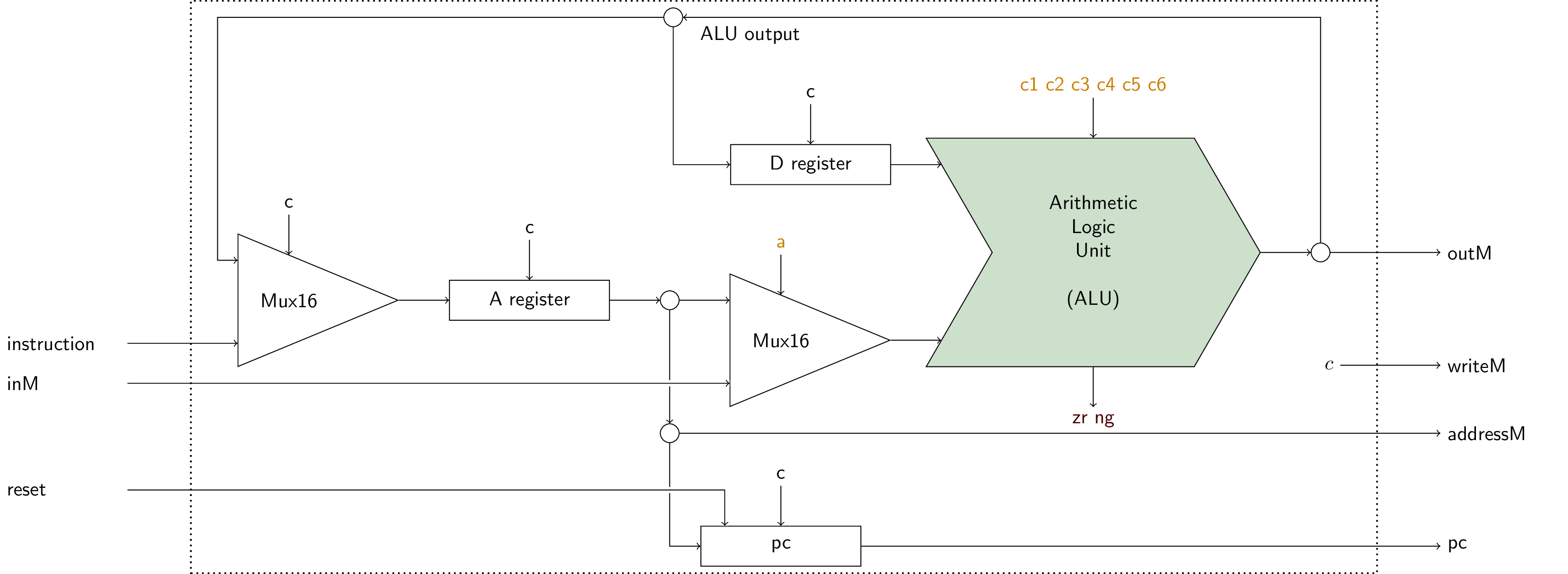

4.4.2. Proposed CPU Implementation

- Incomplete picture

- Several inputs “c” denote control inputs

- E.g., load bits for registers, selector for Mux

- Computed by additional control logic, revisited in class

- Control unit of von Neumann architecture

- Decoding of instruction

- Several inputs “c” denote control inputs

4.5. Hack Computer Chip

Specification in

Computer.hdl![Hack Computer]()

Figure under CC BY-SA 4.0

- Hack computer, including

CPU,Memory(withRAM16K,Screen,Keyboard),ROM32K - Input

resetallows to (re-) start execution of program in ROM

- Hack computer, including

- Build in Project 5

5. Conclusions

5.1. Summary

- Von Neumann architecture as blueprint for programmable,

general-purpose computers

- Bottleneck from memory access, memory wall

- Hack as variant with ROM

- Moore’s law predicts exponential growth of computer power

- Physical limits ahead

- Parallel programming skills required

- Project 5: Hack computer, end of Part 1 of IT Systems

- Few components: CPU, data memory, instruction memory

- CPU executes machine instructions

- Memory-mapped I/O for screen and keyboard

- Few components: CPU, data memory, instruction memory

5.2. Outlook

- In part 2, Operating Systems, we revisit I/O

- Programmed I/O (polling) vs interrupt-driven I/O

- You “polled” when accessing the keyboard in Hack

- Poll: “Has a key been pressed yet?”

- If not, wait in loop → Waste of CPU time

- Poll: “Has a key been pressed yet?”

- Interrupt: Additional input from I/O device to CPU

- Via pin or bus

- Notification to CPU: “Hey, someone pressed key X here. Please act.”

- If no key, no unnecessary processing time

- However, interrupt overhead; to be discussed

- Via pin or bus

- You “polled” when accessing the keyboard in Hack

5.3. Beyond Class

- There is much more to computer architecture, e.g.:

- Optimizations

- Parallelism, e.g., pipelining with speculative execution, hyper-threading, (heterogeneous) multi-core

- Special-purpose processors, e.g., graphics, machine learning, networking

- Variety

- Non-traditional architectures

- E.g., quantum, neuromorphic, adiabatic, biological

- Optimizations

5.4. Self-Study-Tasks

- Taking all other parts for granted, implement

Computer.hdl- Part of Project 5

Bibliography

License Information

Source files are available on GitLab (check out embedded submodules) under free licenses. Icons of custom controls are by @fontawesome, released under CC BY 4.0.

Except where otherwise noted, the work “Computer Architecture”, © 2024-2026 Jens Lechtenbörger, is published under the Creative Commons license CC BY-SA 4.0.